
Jerry Zhi-Yang He,

Daniel S. Brown,

Zackory Erickson,

and Anca Dragan

CoRL, 2023

[PDF]


@inproceedings{he2023quantifying,

title={Quantifying Assistive Robustness Via the Natural-Adversarial Frontier},

author={He, Jerry Zhi-Yang and Brown, Daniel S and Erickson, Zackory and Dragan, Anca},

booktitle={7th Annual Conference on Robot Learning},

year={2023}

}

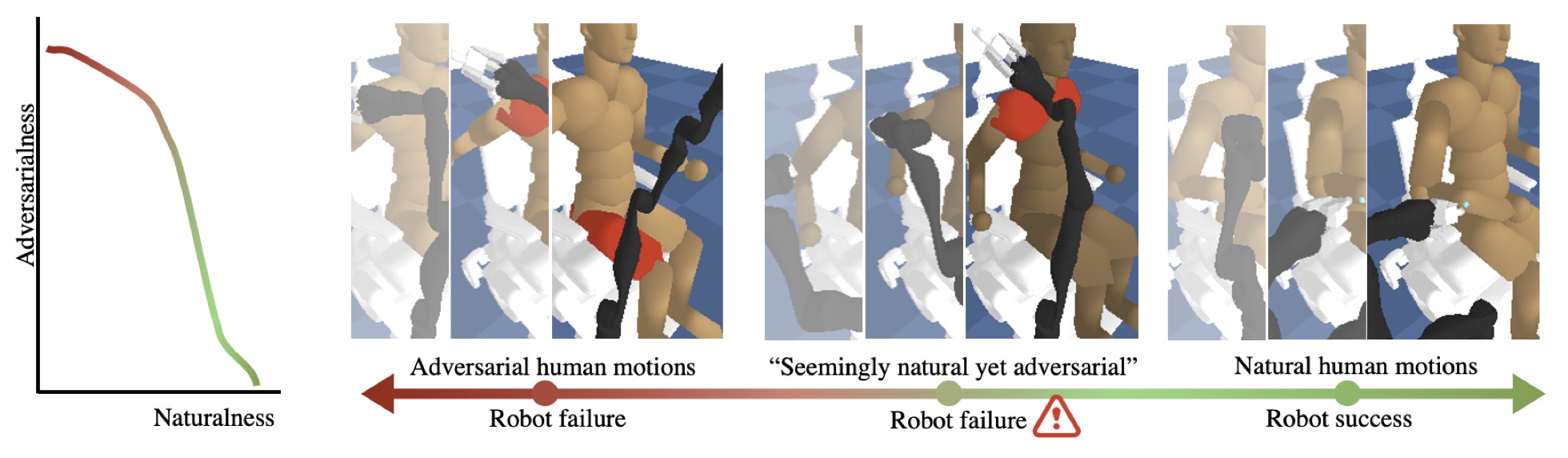

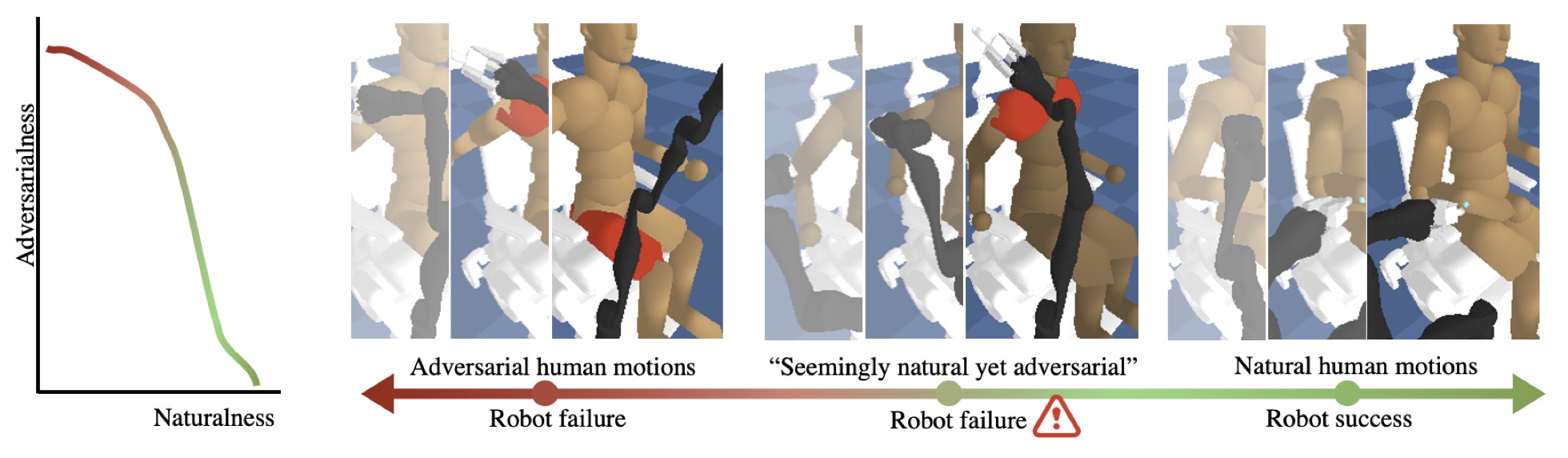

Our ultimate goal is to build robust policies for robots that assist people. What makes this hard is that people can behave unexpectedly at test time, potentially interacting with the robot outside its training distribution and leading to failures. Even just measuring robustness is a challenge. Adversarial perturbations are the default, but they can paint the wrong picture: they can correspond to human motions that are unlikely to occur during natural interactions with people. A robot policy might fail under small adversarial perturbations but work under large natural perturbations. We propose that capturing robustness in these interactive settings requires constructing and analyzing the entire natural-adversarial frontier: the Pareto-frontier of human policies that are the best trade-offs between naturalness and low robot performance. We introduce RIGID, a method for constructing this frontier by training adversarial human policies that trade off between minimizing robot reward and acting human-like (as measured by a discriminator). On an Assistive Gym task, we use RIGID to analyze the performance of standard collaborative RL, as well as the performance of existing methods meant to increase robustness. We also compare the frontier RIGID identifies with the failures identified in expert adversarial interaction, and with naturally-occurring failures during user interaction. Overall, we find evidence that RIGID can provide a meaningful measure of robustness predictive of deployment performance, and uncover failure cases in human-robot interaction that are difficult to find manually.
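To make the frontier concrete: given a set of already-evaluated human policies, the natural-adversarial frontier is their non-dominated subset, where one policy beats another if it is at least as natural and leaves the robot with at most as much reward, and is strictly better on one of the two. The Python sketch below assumes each policy is summarized by two numbers, a naturalness score (for example, a discriminator's average output) and the robot's mean reward. The `Candidate` type and function names are hypothetical, and the sketch only extracts the frontier from given points; it does not reproduce RIGID's adversarial training.

```python
from typing import List, NamedTuple


class Candidate(NamedTuple):
    name: str
    naturalness: float   # higher = more human-like (e.g., a discriminator score)
    robot_reward: float  # lower = worse for the robot (more adversarial)


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if `a` is at least as natural and at least as adversarial as `b`,
    and strictly better on at least one of the two axes."""
    no_worse = a.naturalness >= b.naturalness and a.robot_reward <= b.robot_reward
    strictly_better = a.naturalness > b.naturalness or a.robot_reward < b.robot_reward
    return no_worse and strictly_better


def natural_adversarial_frontier(cands: List[Candidate]) -> List[Candidate]:
    """Return the non-dominated candidates, ordered by increasing naturalness."""
    frontier = [c for c in cands if not any(dominates(o, c) for o in cands)]
    return sorted(frontier, key=lambda c: c.naturalness)
```

The pairwise check is quadratic in the number of candidates, which is fine for the handful of policies one would typically evaluate. Comparing the frontiers found against two robot policies at matched naturalness levels is one way to read off which of them is more robust.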


Akhil Padmanabha,

Sonal Choudhary,

Carmel Majidi*,

and Zackory Erickson*

Nature Communications Medicine, 2023

[PDF]

[Code]


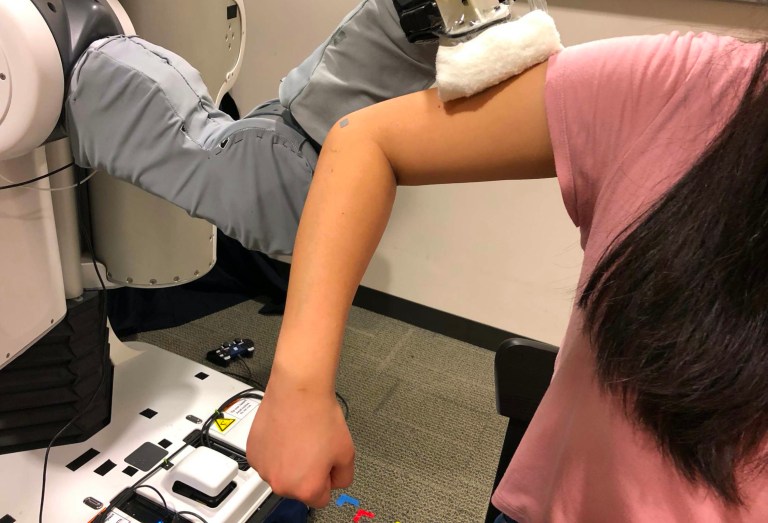

@article{padmanabha2023multimodal,

title={A Multimodal Sensing Ring for Quantification of Scratch Intensity},

author={Padmanabha, Akhil and Choudhary, Sonal and Majidi, Carmel and Erickson, Zackory},

journal={Nature Communications Medicine},

year={2023},

organization={Nature}

}

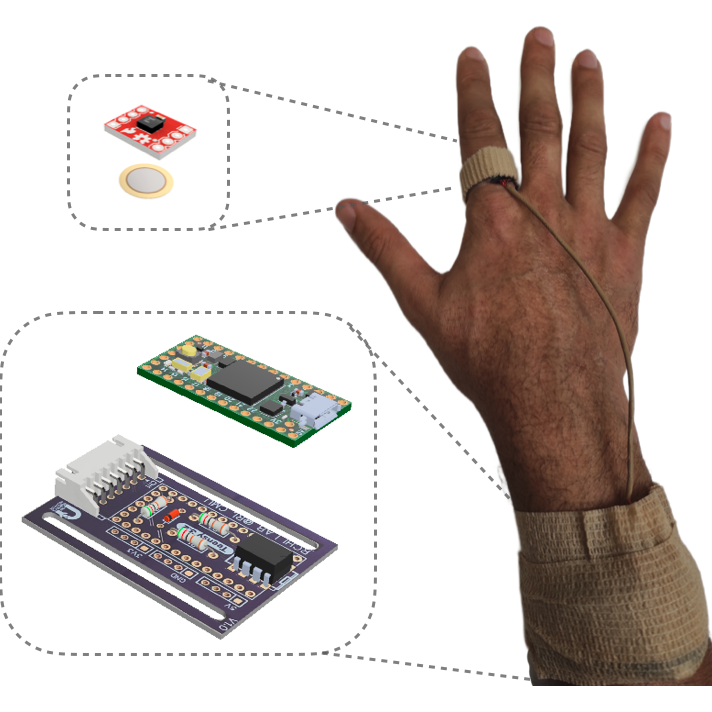

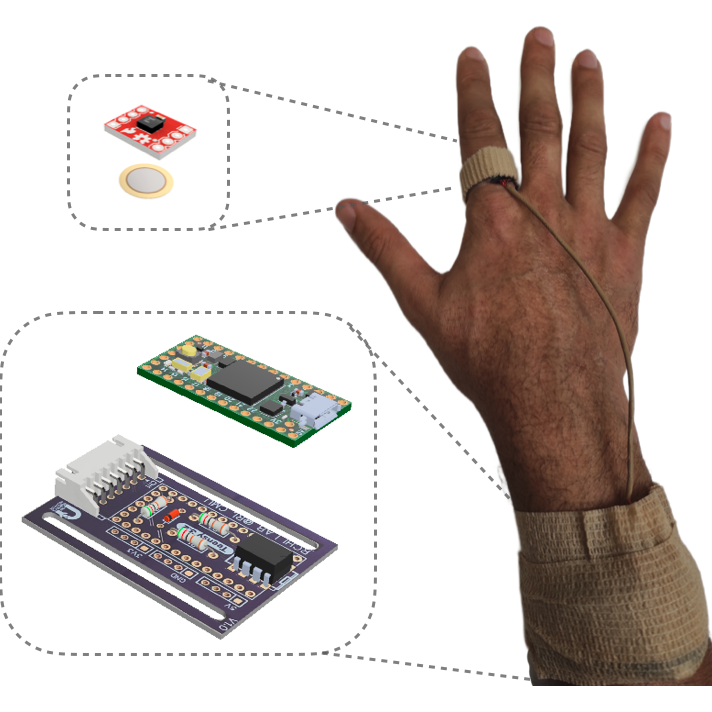

An objective measurement of chronic itch is necessary for improvements in patient care for numerous medical conditions. While wearables have shown promise for scratch detection, they are currently unable to estimate scratch intensity, preventing a comprehensive understanding of the effect of itch on an individual. In this work, we present a framework for estimating scratch intensity in addition to detecting scratching. This is accomplished with a multimodal ring device consisting of an accelerometer and a contact microphone; a pressure-sensitive tablet for capturing ground truth intensity values; and machine learning algorithms for regression of scratch intensity on a 0-600 milliwatt (mW) power scale that can be mapped to a 0-10 continuous scale. We evaluate the performance of our algorithms on 20 individuals using leave-one-subject-out cross-validation, and, using data from 14 additional participants, we show that our algorithms achieve clinically relevant discrimination of scratching intensity levels. By doing so, our device enables the quantification of the substantial variations in the interpretation of the 0-10 scale frequently utilized in patient self-reported clinical assessments. This work demonstrates that a finger-worn device can provide multidimensional, objective, real-time measures for the action of scratching.
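Leave-one-subject-out cross-validation holds out all data from one participant per fold, so each model is always scored on a person it never saw during training. The sketch below shows the splitting logic in plain Python. The constant mean-power predictor is a hypothetical stand-in for the paper's regression models, and any values passed to it are toy numbers, not study data.

```python
from typing import Dict, Iterator, List, Sequence, Tuple


def leave_one_subject_out(
    subject_ids: Sequence[str],
) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject."""
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


def loso_mae_mean_baseline(
    subject_ids: Sequence[str], power_mw: Sequence[float]
) -> Dict[str, float]:
    """Per-subject mean absolute error of a hypothetical baseline that predicts
    the mean training-fold power (mW) for every held-out sample."""
    if len(subject_ids) != len(power_mw):
        raise ValueError("subject_ids and power_mw must have the same length")
    errors: Dict[str, float] = {}
    for held_out, train, test in leave_one_subject_out(subject_ids):
        if not train:
            raise ValueError("need at least two distinct subjects")
        prediction = sum(power_mw[i] for i in train) / len(train)
        errors[held_out] = sum(abs(power_mw[i] - prediction) for i in test) / len(test)
    return errors
```

Splitting by subject rather than by sample keeps windows from the same person out of both the training and test sets, which would otherwise inflate the measured accuracy.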


Kavya Puthuveetil,

Sasha Wald,

Atharva Pusalkar,

Pratyusha Karnati,

and Zackory Erickson

IEEE Robotics and Automation Letters (RA-L), 2023

[PDF]

[Project Page]


@article{puthuveetil2023robust,

title={Robust Body Exposure (RoBE): A Graph-based Dynamics Modeling Approach to Manipulating Blankets over People},

author={Puthuveetil, Kavya and Wald, Sasha and Pusalkar, Atharva and Karnati, Pratyusha and Erickson, Zackory},

journal={IEEE Robotics and Automation Letters},

year={2023},

organization={IEEE}

}

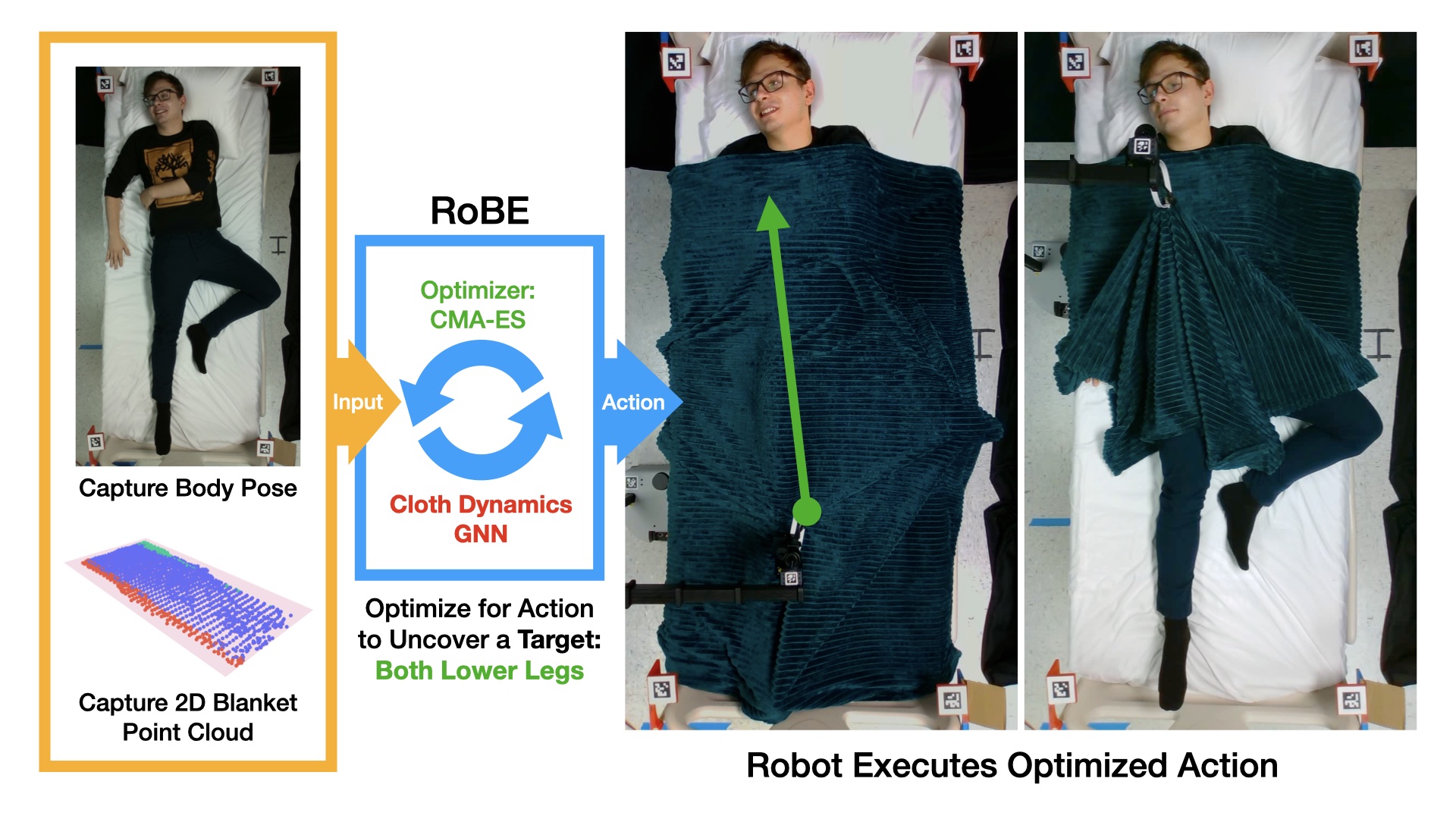

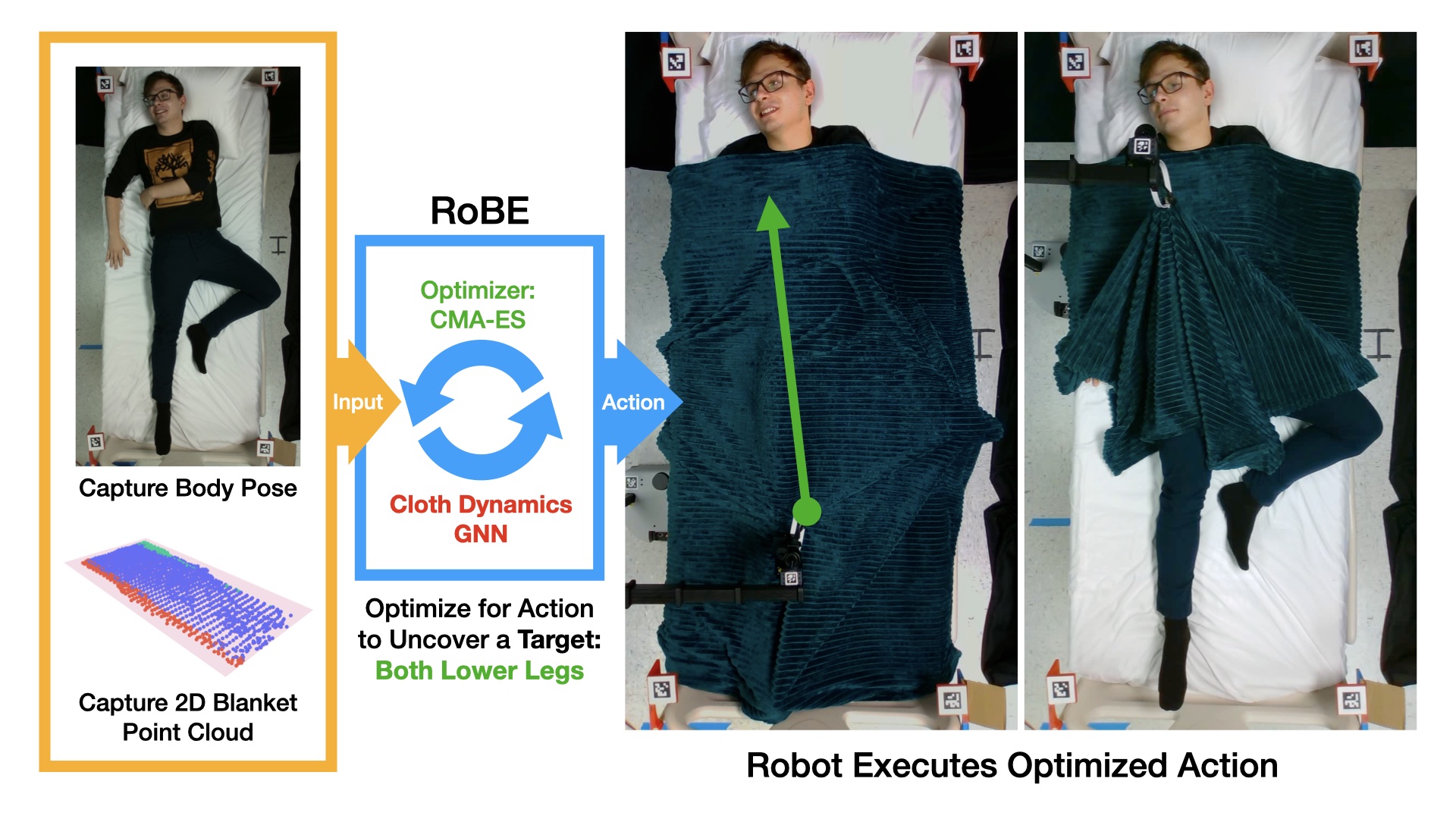

Robotic caregivers could potentially improve the quality of life of many who require physical assistance. However, in order to assist individuals who are lying in bed, robots must be capable of dealing with a significant obstacle: the blanket or sheet that will almost always cover the person's body. We propose a method for targeted bedding manipulation over people lying supine in bed where we first learn a model of the cloth's dynamics. Then, we optimize over this model to uncover a given target limb using information about human body shape and pose that only needs to be provided at run-time. We show how this approach enables greater robustness to variation relative to geometric and reinforcement learning baselines via a number of generalization evaluations in simulation and in the real world. We further evaluate our approach in a human study with 12 participants where we demonstrate that a mobile manipulator can adapt to real variation in human body shape, size, pose, and blanket configuration to uncover target body parts without exposing the rest of the body. Source code and supplementary materials are available online.


Yufei Wang,

Zhanyi Sun,

Zackory Erickson*,

and David Held*

RSS, 2023

[Project Page]


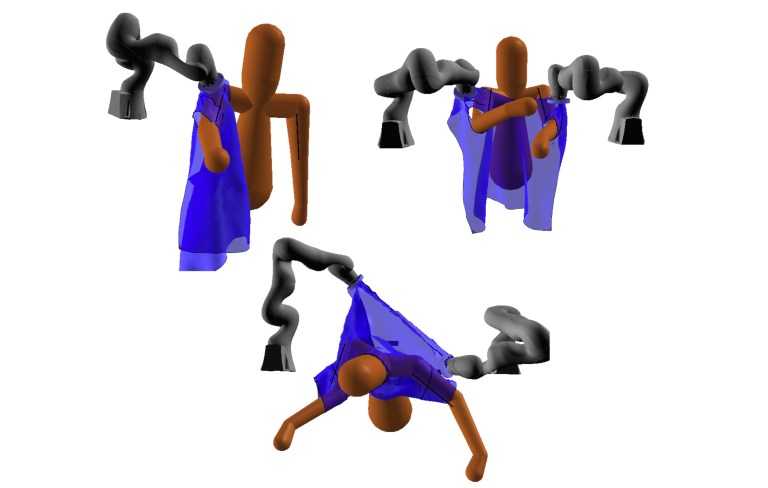

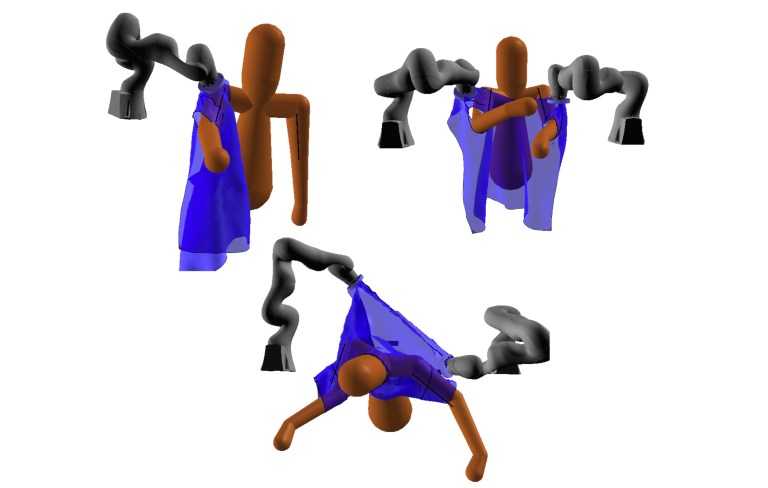

@inproceedings{wang2023one,

title={One Policy to Dress Them All: Learning to Dress People with Diverse Poses and Garments},

author={Wang, Yufei and Sun, Zhanyi and Erickson, Zackory and Held, David},

booktitle={2023 Robotics: Science and Systems (RSS)},

year={2023}

}

Robot-assisted dressing could benefit the lives of many people, such as older adults and individuals with disabilities. Despite such potential, robot-assisted dressing remains a challenging task for robotics, as it involves complex manipulation of deformable cloth in 3D space. Many prior works aim to solve the robot-assisted dressing task, but they make certain assumptions, such as a fixed garment and a fixed arm pose, that limit their ability to generalize. In this work, we develop a robot-assisted dressing system that is able to dress different garments on people with diverse poses from partial point cloud observations, based on a learned policy. We show that with proper design of the policy architecture and Q function, reinforcement learning (RL) can be used to learn effective policies with partial point cloud observations that work well for dressing diverse garments. We further leverage policy distillation to combine multiple policies trained on different ranges of human arm poses into a single policy that works over a wide range of different arm poses. We conduct comprehensive real-world evaluations of our system with 510 dressing trials in a human study with 17 participants with different arm poses and dressed garments. Our system is able to dress 86% of the length of the participants' arms on average. Videos can be found on the project webpage: https://sites.google.com/view/one-policy-dress.


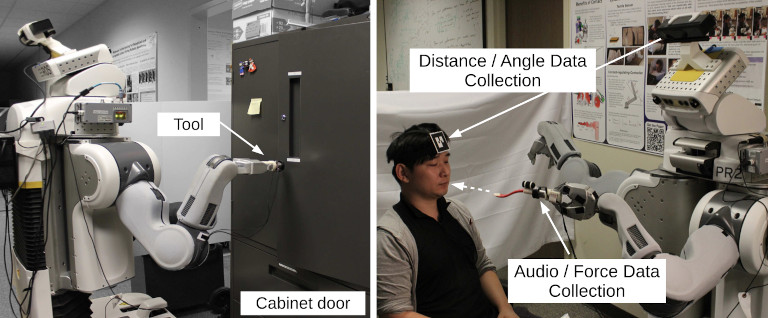

Akhil Padmanabha*,

Qin Wang*,

Daphne Han,

Jashkumar Diyora,

Kriti Kacker,

Hamza Khalid,

Liang-Jung Chen,

Carmel Majidi,

and Zackory Erickson

ICRA, 2023

[PDF]

[Project Page]


@inproceedings{padmanabha2023hatteleop,

title={HAT: Head-Worn Assistive Teleoperation of Mobile Manipulators},

author={Padmanabha, Akhil and Wang, Qin and Han, Daphne and Diyora, Jashkumar and Kacker, Kriti and Khalid, Hamza and Chen, Liang-Jung and Majidi, Carmel and Erickson, Zackory},

booktitle={2023 IEEE International Conference on Robotics and Automation (ICRA)},

year={2023},

organization={IEEE}

}

Mobile manipulators in the home can provide increased autonomy to individuals with severe motor impairments, who often cannot complete activities of daily living (ADLs) without the help of a caregiver. Teleoperation of an assistive mobile manipulator could enable an individual with motor impairments to independently perform self-care and household tasks, yet limited motor function can impede one's ability to interface with a robot. In this work, we present a unique inertial-based wearable assistive interface, embedded in a familiar head-worn garment, for individuals with severe motor impairments to teleoperate and perform physical tasks with a mobile manipulator. We evaluate this wearable interface with both able-bodied individuals (N = 16) and individuals with motor impairments (N = 2) for performing ADLs and everyday household tasks. Our results show that the wearable interface enabled participants to complete physical tasks with low error rates, high perceived ease of use, and low workload measures. Overall, this inertial-based wearable serves as a new assistive interface option for control of mobile manipulators in the home.


Nathaniel Hanson*,

Wesley Lewis*,

Kavya Puthuveetil,

Donelle Furline Jr,

Akhil Padmanabha,

Taskin Padir,

and Zackory Erickson

ICRA, 2023

[PDF]

[Project Page]

[Code]


@inproceedings{hanson2023slurp,

title={SLURP! Spectroscopy of Liquids Using Robot Pre-Touch Sensing},

author={Hanson, Nathaniel and Lewis, Wesley and Puthuveetil, Kavya and Furline, Donelle and Padmanabha, Akhil and Pad{\i}r, Ta{\c{s}}k{\i}n and Erickson, Zackory},

booktitle={2023 IEEE International Conference on Robotics and Automation (ICRA)},

year={2023},

organization={IEEE}

}

Liquids and granular media are pervasive throughout human environments. Their free-flowing nature causes people to constrain them into containers. We do so with thousands of different types of containers made out of different materials with varying sizes, shapes, and colors. In this work, we present a state-of-the-art sensing technique for robots to perceive what liquid is inside of an unknown container. We do so by integrating Visible to Near Infrared (VNIR) reflectance spectroscopy into a robot's end effector. We introduce a hierarchical model for inferring the material classes of both containers and internal contents given spectral measurements from two integrated spectrometers. To train these inference models, we capture and open source a dataset of spectral measurements from over 180 different combinations of containers and liquids. Our technique demonstrates over 85% accuracy in identifying 13 different liquids and granular media contained within 13 different containers. The sensitivity of our spectral readings allows our model to also identify the material composition of the containers themselves with 96% accuracy. Overall, VNIR spectroscopy presents a promising method to give household robots a general-purpose ability to infer the liquids inside of containers, without needing to open or manipulate the containers.


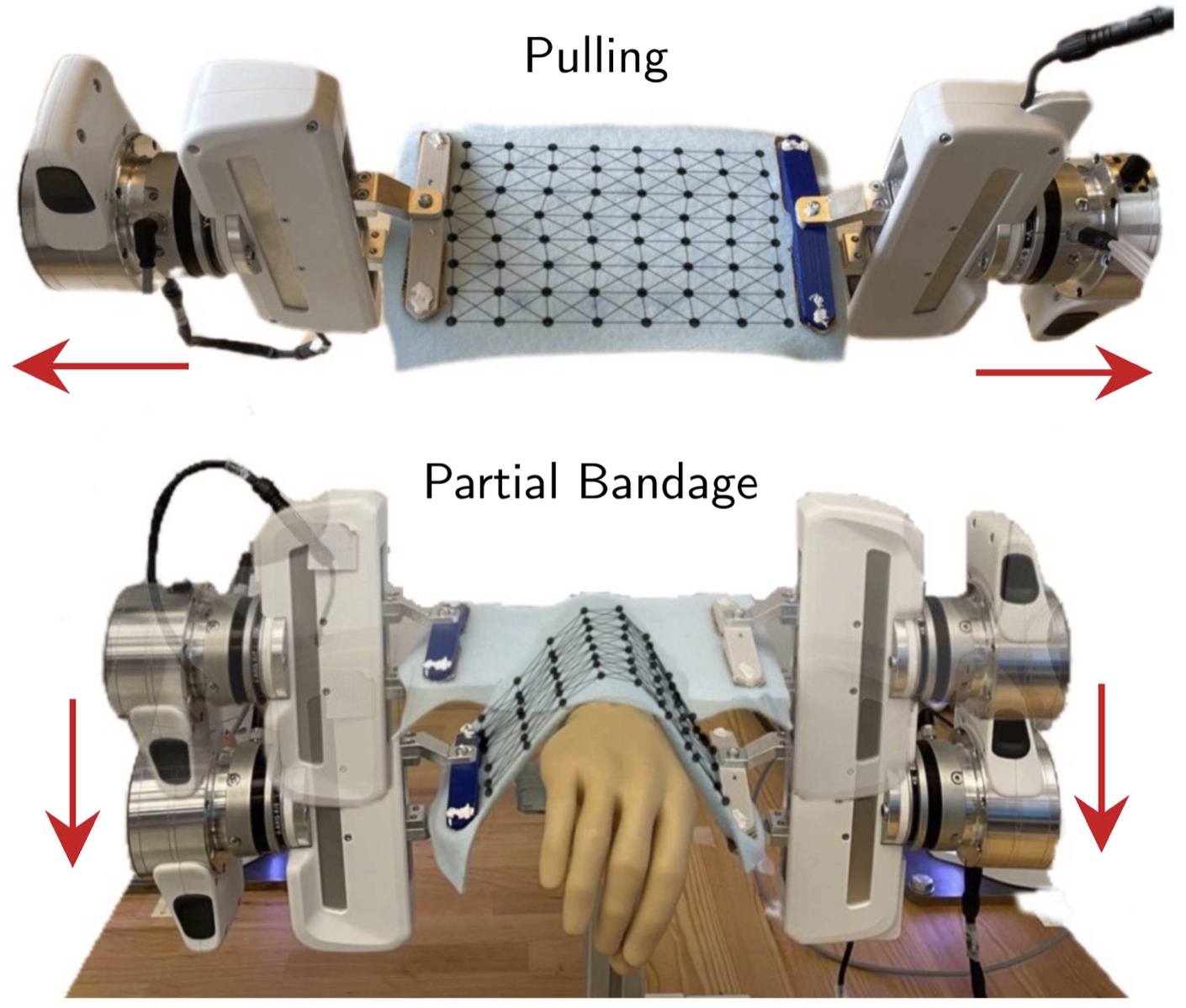

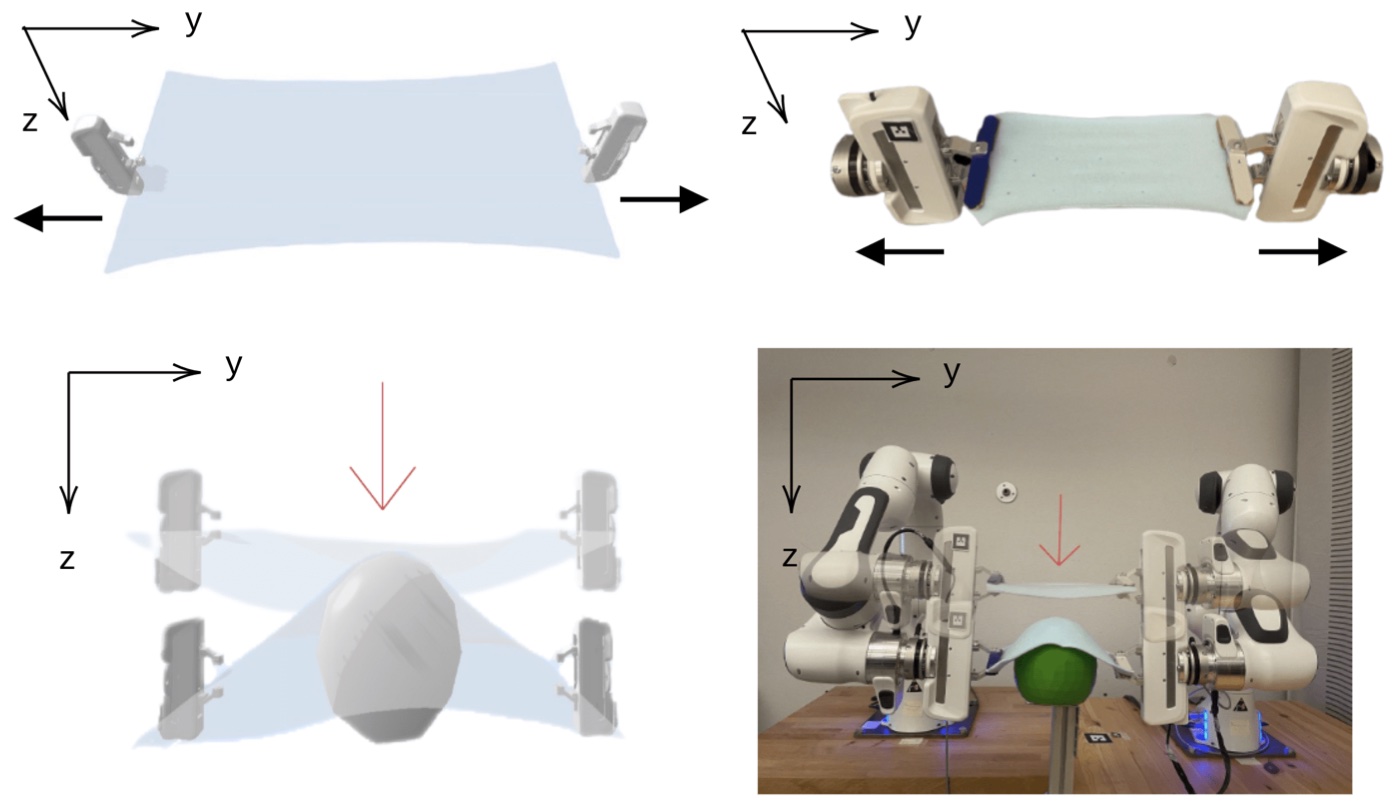

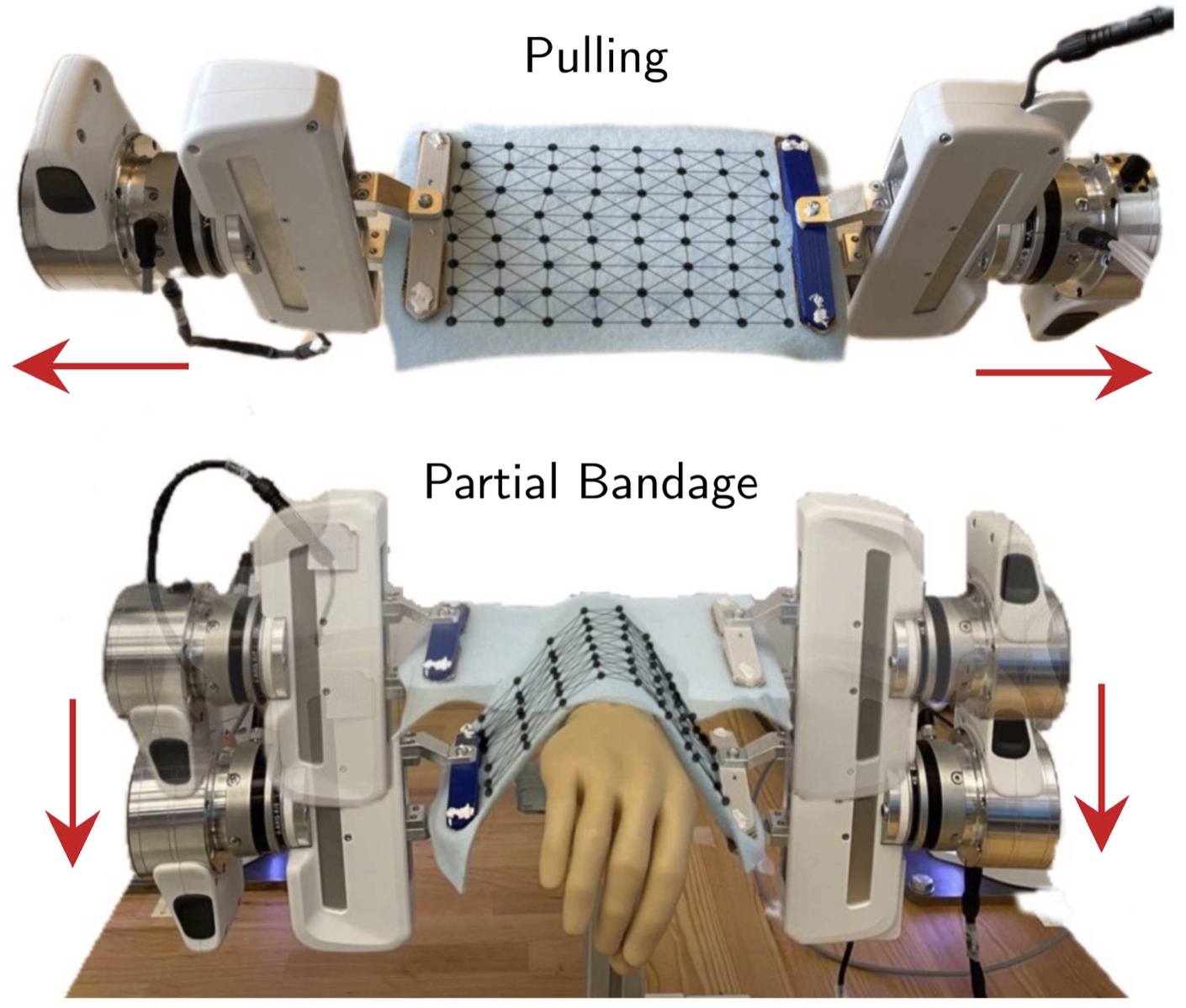

Alberta Longhini*,

Marco Moletta*,

Alfredo Reichlin,

Michael C. Welle,

David Held,

Zackory Erickson,

and Danica Kragic

ICRA, 2023

[PDF]


@inproceedings{longhini2023edonet,

title={EDO-Net: Learning Elastic Properties of Deformable Objects from Graph Dynamics},

author={Longhini, Alberta and Moletta, Marco and Reichlin, Alfredo and Welle, Michael C and Held, David and Erickson, Zackory and Kragic, Danica},

booktitle={2023 IEEE International Conference on Robotics and Automation (ICRA)},

year={2023},

organization={IEEE}

}

We study the problem of learning graph dynamics of deformable objects that generalize to unknown physical properties. In particular, we leverage a latent representation of elastic physical properties of cloth-like deformable objects, which we explore through a pulling interaction. We propose EDO-Net (Elastic Deformable Object - Net), a model trained in a self-supervised fashion on a large variety of samples with different elastic properties. EDO-Net jointly learns an adaptation module, responsible for extracting a latent representation of the physical properties of the object, and a forward-dynamics module, which leverages the latent representation to predict future states of cloth-like objects, represented as graphs. We evaluate EDO-Net both in simulation and in the real world, assessing its capabilities of 1) generalizing to unknown physical properties of cloth-like deformable objects and 2) transferring the learned representation to new downstream tasks.


Alberta Longhini,

Marco Moletta,

Alfredo Reichlin,

Michael C. Welle,

Alexander Kravberg,

Yufei Wang,

David Held,

Zackory Erickson,

and Danica Kragic

ICRA, 2023

[PDF]


@inproceedings{longhini2023elastic,

title={Elastic Context: Encoding Elasticity for Data-driven Models of Textiles},

author={Longhini, Alberta and Moletta, Marco and Reichlin, Alfredo and Welle, Michael C and Kravberg, Alexander and Wang, Yufei and Held, David and Erickson, Zackory and Kragic, Danica},

booktitle={2023 IEEE International Conference on Robotics and Automation (ICRA)},

year={2023},

organization={IEEE}

}

Physical interaction with textiles, such as in assistive dressing scenarios, relies on advanced dexterous capabilities. The underlying complexity in textile behavior when being pulled and stretched is due to both the yarn material properties and the textile construction technique. These are often not known a priori, making them almost impossible to identify through sensing commonly available on robotic platforms. We introduce Elastic Context (EC) to encode elastic behaviors, which enables more effective physical interaction with textiles. The definition of EC relies on stress/strain curves commonly used in textile engineering, which we reformulated for robotic applications. We employ EC using a graph neural network (GNN) to learn generalized elastic behaviors of textiles. Furthermore, we explore the effect of the dimension of the EC on accurate force modeling of non-linear real-world elastic behaviors.


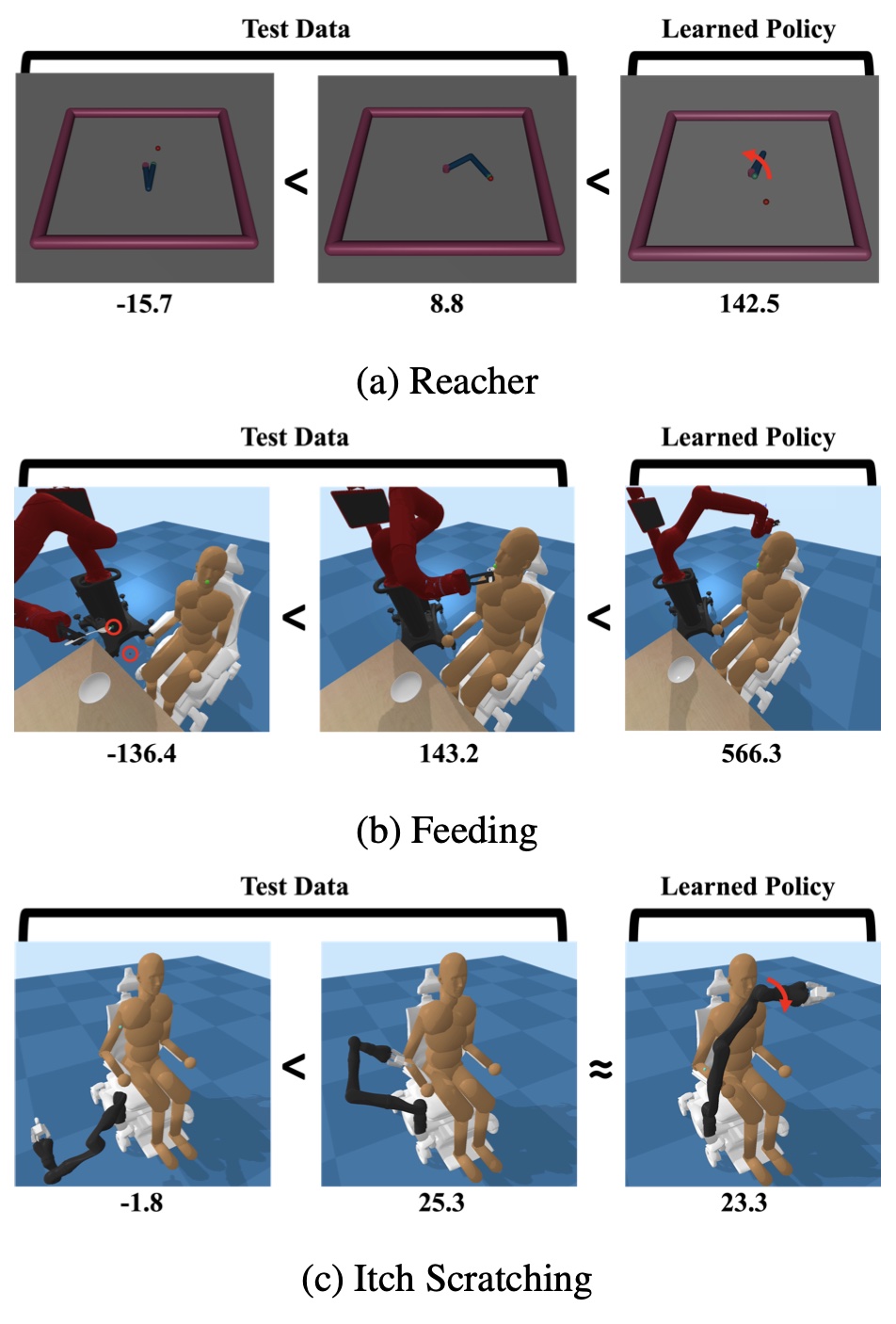

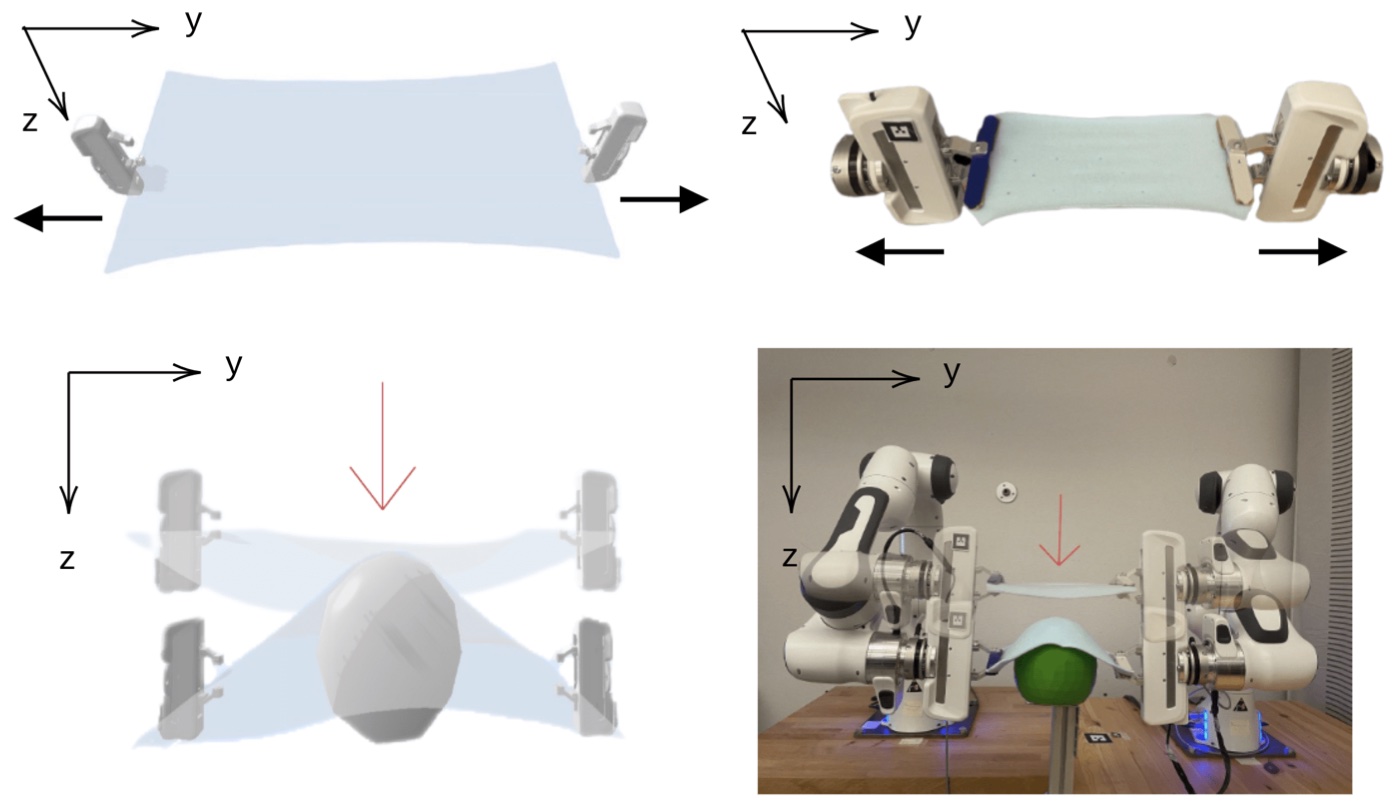

Jeremy Tien,

Jerry Zhi-Yang He,

Zackory Erickson,

Anca D. Dragan,

and Daniel S. Brown

ICLR, 2023

[PDF]

[Project Page]

[Code]


@inproceedings{tien2023causal,

title={A Study of Causal Confusion in Preference-Based Reward Learning},

author={Tien, Jeremy and He, Jerry Zhi-Yang and Erickson, Zackory and Dragan, Anca D and Brown, Daniel},

booktitle={International Conference on Learning Representations},

year={2023}

}

Learning policies via preference-based reward learning is an increasingly popular method for customizing agent behavior, but has been shown anecdotally to be prone to spurious correlations and reward hacking behaviors. While much prior work focuses on causal confusion in reinforcement learning and behavioral cloning, we aim to study it in the context of reward learning. To study causal confusion, we perform a series of sensitivity and ablation analyses on three benchmark domains where rewards learned from preferences achieve minimal test error but fail to generalize to out-of-distribution states--resulting in poor policy performance when optimized. We find that the presence of non-causal distractor features, noise in the stated preferences, partial state observability, and larger model capacity can all exacerbate causal confusion. We also identify a set of methods with which to interpret causally confused learned rewards: we observe that optimizing causally confused rewards drives the policy off the reward's training distribution, resulting in high predicted (learned) rewards but low true rewards. These findings illuminate the susceptibility of reward learning to causal confusion, especially in high-dimensional environments—failure to consider even one of many factors (data coverage, state definition, etc.) can quickly result in unexpected, undesirable behavior.
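Preference-based reward learning is commonly framed with a Bradley-Terry model: the probability that one trajectory is preferred over another is the logistic function of the difference in their predicted returns, and the reward model is trained by minimizing the negative log-likelihood of the stated preferences. The sketch below is a generic illustration, not the paper's training code; the two-feature state layout, the `causal` and `distracted` reward functions, and all other names are hypothetical. It illustrates one point from the abstract: when a distractor feature is perfectly correlated with task progress in the training pairs, a reward keyed to the distractor fits the preferences exactly as well as the causal one.

```python
import math
from typing import Callable, List, Sequence, Tuple

State = Tuple[float, ...]
Trajectory = Sequence[State]
RewardFn = Callable[[State], float]


def log_sigmoid(x: float) -> float:
    """Numerically stable log(1 / (1 + exp(-x)))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def predicted_return(reward_fn: RewardFn, traj: Trajectory) -> float:
    """Sum of per-state learned rewards along a trajectory."""
    return sum(reward_fn(s) for s in traj)


def preference_prob(reward_fn: RewardFn, traj_a: Trajectory, traj_b: Trajectory) -> float:
    """Bradley-Terry probability that traj_a is preferred over traj_b."""
    diff = predicted_return(reward_fn, traj_a) - predicted_return(reward_fn, traj_b)
    return math.exp(log_sigmoid(diff))


def preference_nll(
    reward_fn: RewardFn,
    pairs: List[Tuple[Trajectory, Trajectory]],
    labels: List[int],
) -> float:
    """Mean negative log-likelihood; label 1 means traj_a was preferred, 0 means traj_b."""
    total = 0.0
    for (traj_a, traj_b), label in zip(pairs, labels):
        diff = predicted_return(reward_fn, traj_a) - predicted_return(reward_fn, traj_b)
        total -= log_sigmoid(diff) if label == 1 else log_sigmoid(-diff)
    return total / len(pairs)


# Hypothetical state layout: (task_progress, distractor).
def causal(s: State) -> float:
    return s[0]  # reward keyed to true task progress


def distracted(s: State) -> float:
    return s[1]  # reward keyed to a feature that merely correlates with progress
```

On training pairs where the two features move together, both reward functions reach the same loss; only states where the distractor decouples from progress reveal the difference, and optimizing a policy against `distracted` would push it toward exactly such states.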


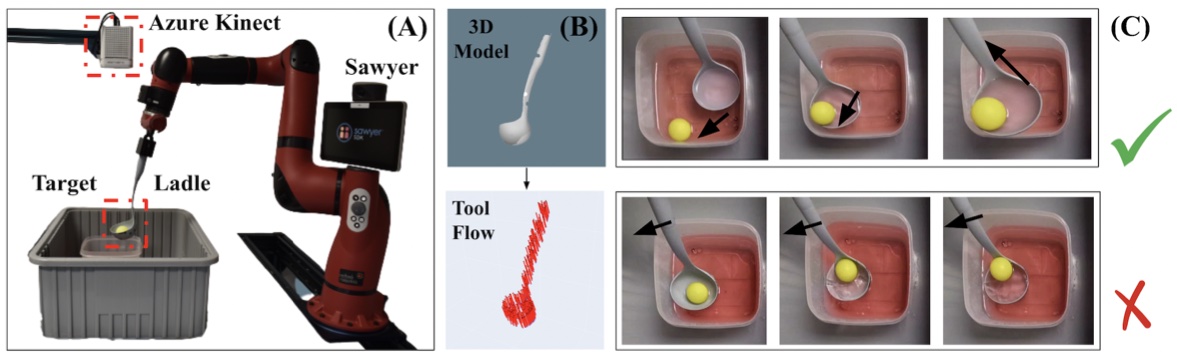

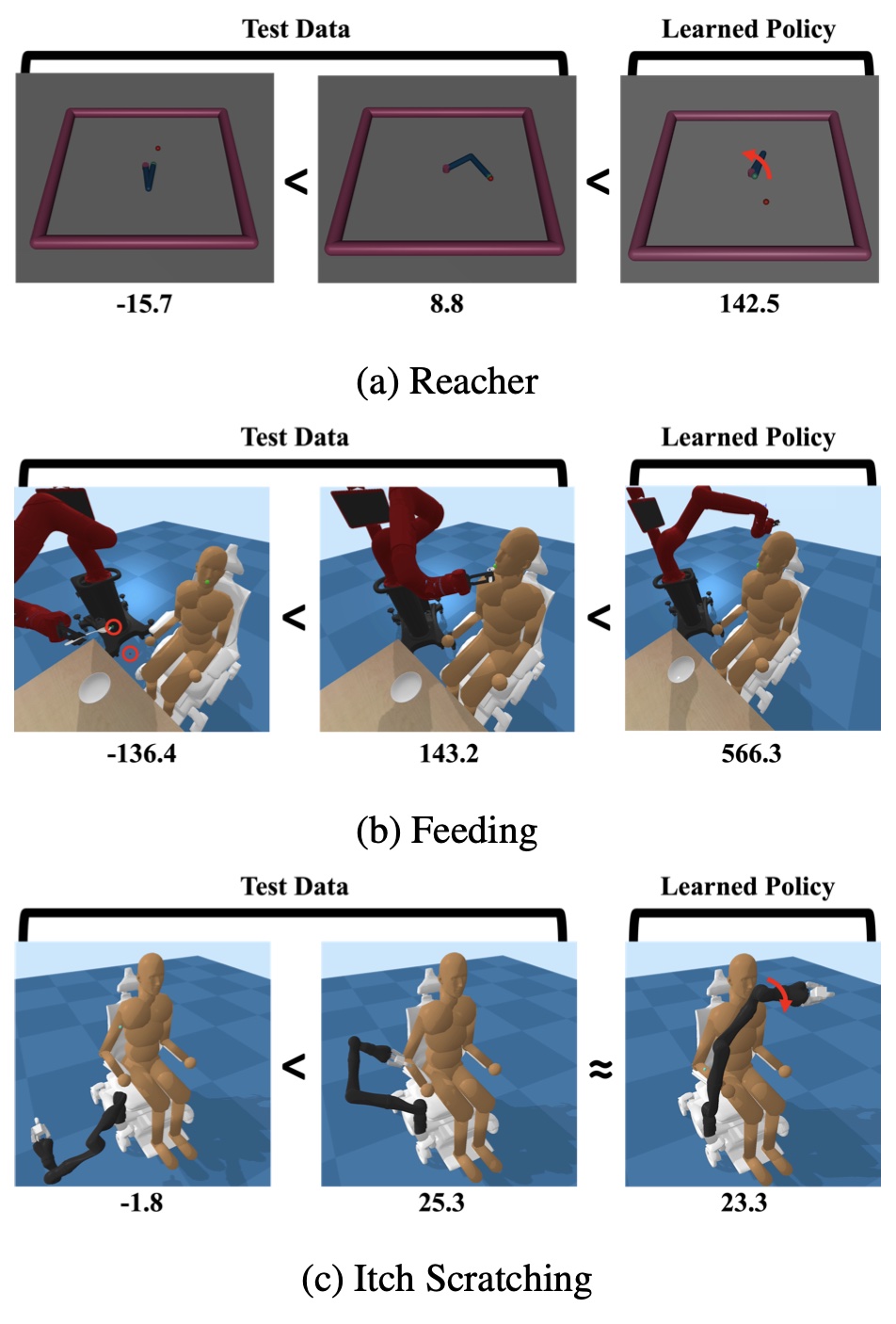

Daniel Seita,

Yufei Wang,

Edward Yao Li,

Sarthak J Shetty,

Zackory Erickson,

and David Held

CoRL, 2022

[PDF]

[Project Page]


Point clouds are a widely available and canonical data modality which conveys the 3D geometry of a scene. Despite significant progress in classification and segmentation from point clouds, policy learning from such a modality remains challenging, and most prior works in imitation learning focus on learning policies from images or state information. In this paper, we propose a novel framework for learning policies from point clouds for robotic manipulation with tools. We use a novel neural network, ToolFlowNet, which predicts dense per-point flow on the tool that the robot controls, and then uses the flow to derive the transformation that the robot should execute. We apply this framework to imitation learning of challenging deformable object manipulation tasks with continuous movement of tools, including scooping and pouring, and demonstrate significantly improved performance over baselines which do not use flow. We perform physical scooping experiments with ToolFlowNet and find that we can attain 78% scooping success.
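ToolFlowNet converts predicted per-point flow into a rigid transformation for the tool. One standard way to do that conversion is a least-squares (Kabsch/Procrustes) fit between the tool points and the same points displaced by their flow, which in 3D is usually solved with an SVD. To stay dependency-free, the Python sketch below solves only the planar (2D) case, where the optimal rotation has a closed form via `atan2`. It illustrates the fitting step under that simplification, not the authors' implementation, and the function name is hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def rigid_transform_from_flow(
    points: List[Point], flow: List[Point]
) -> Tuple[float, float, float]:
    """Least-squares planar rigid transform (theta, tx, ty) that best maps each
    point p_i to p_i + flow_i, i.e. minimizes sum ||R(theta) p_i + t - (p_i + f_i)||^2."""
    if not points or len(points) != len(flow):
        raise ValueError("need a non-empty, equal number of points and flow vectors")
    n = len(points)
    targets = [(px + fx, py + fy) for (px, py), (fx, fy) in zip(points, flow)]
    # Centroids of the source points and of the flowed (target) points.
    cpx = sum(p[0] for p in points) / n
    cpy = sum(p[1] for p in points) / n
    ctx = sum(q[0] for q in targets) / n
    cty = sum(q[1] for q in targets) / n
    # Accumulate dot and cross products of the centered point pairs.
    dot_sum = 0.0
    cross_sum = 0.0
    for (px, py), (qx, qy) in zip(points, targets):
        ax, ay = px - cpx, py - cpy
        bx, by = qx - ctx, qy - cty
        dot_sum += ax * bx + ay * by
        cross_sum += ax * by - ay * bx
    # The rotation maximizing sum_i b_i . R a_i has this closed form in 2D.
    theta = math.atan2(cross_sum, dot_sum)
    c, s = math.cos(theta), math.sin(theta)
    # The translation aligns the rotated source centroid with the target centroid.
    tx = ctx - (c * cpx - s * cpy)
    ty = cty - (s * cpx + c * cpy)
    return theta, tx, ty
```

Because the fit pools every point on the tool, noise in individual flow vectors tends to average out in the recovered rotation and translation.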


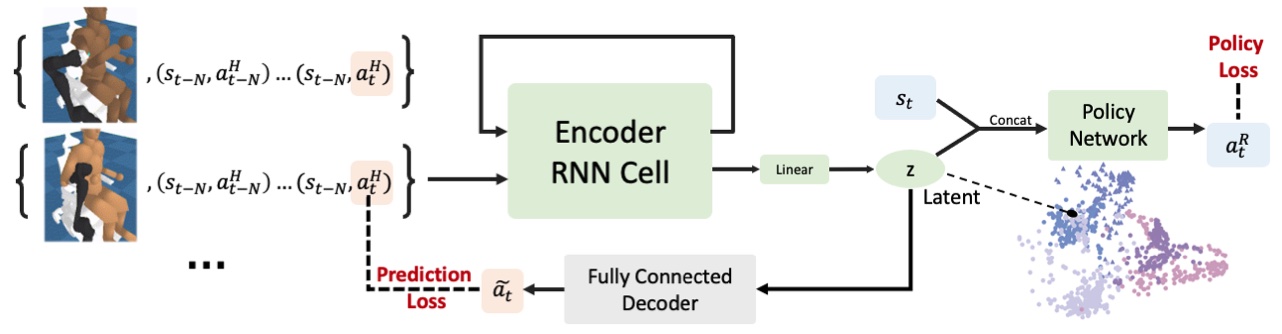

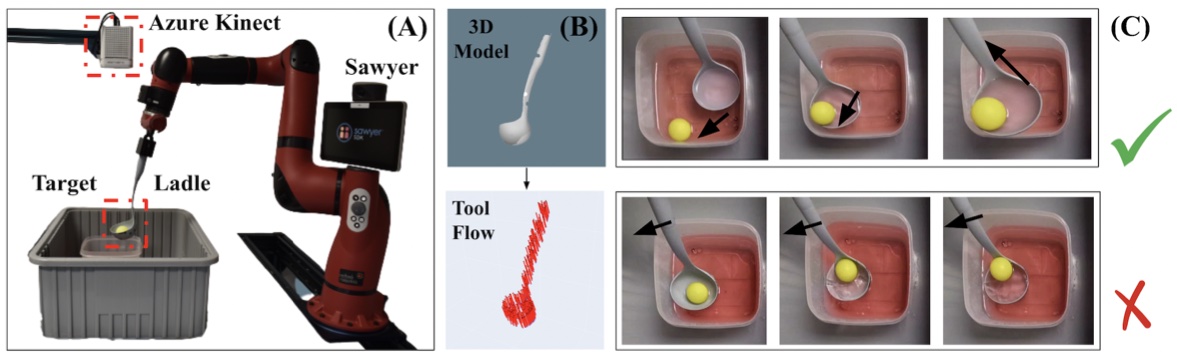

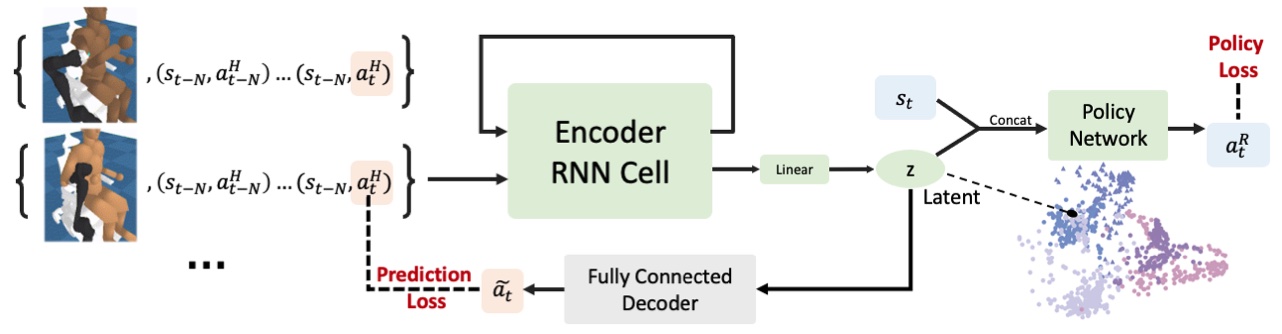

Jerry Zhi-Yang He,

Zackory Erickson,

Daniel S. Brown,

Aditi Raghunathan,

and Anca Dragan

CoRL, 2022

[PDF]


@inproceedings{he2022learning,

title={Learning Representations that Enable Generalization in Assistive Tasks},

author={He, Jerry Zhi-Yang and Raghunathan, Aditi and Brown, Daniel S and Erickson, Zackory and Dragan, Anca D},

booktitle={6th Annual Conference on Robot Learning},

year={2022},

url={https://openreview.net/forum?id=b88HF4vd_ej}

}

Recent work in sim2real has successfully enabled robots to act in physical environments by training in simulation with a diverse "population" of environments (i.e. domain randomization). In this work, we focus on enabling generalization in assistive tasks: tasks in which the robot is acting to assist a user (e.g. helping someone with motor impairments with bathing or with scratching an itch). Such tasks are particularly interesting relative to prior sim2real successes because the environment now contains a human who is also acting. This complicates the problem because the diversity of human users (instead of merely physical environment parameters) is more difficult to capture in a population, thus increasing the likelihood of encountering out-of-distribution (OOD) human policies at test time. We advocate that generalization to such OOD policies benefits from (1) learning a good latent representation for human policies that test-time humans can accurately be mapped to, and (2) making that representation adaptable with test-time interaction data, instead of relying on it to perfectly capture the space of human policies based on the simulated population only. We study how to best learn such a representation by evaluating on purposefully constructed OOD test policies. We find that sim2real methods that encode environment (or population) parameters and work well in tasks that robots do in isolation, do not work well in assistance. In assistance, it seems crucial to train the representation based on the history of interaction directly, because that is what the robot will have access to at test time. Further, training these representations to then predict human actions not only gives them better structure, but also enables them to be fine-tuned at test-time, when the robot observes the partner act.


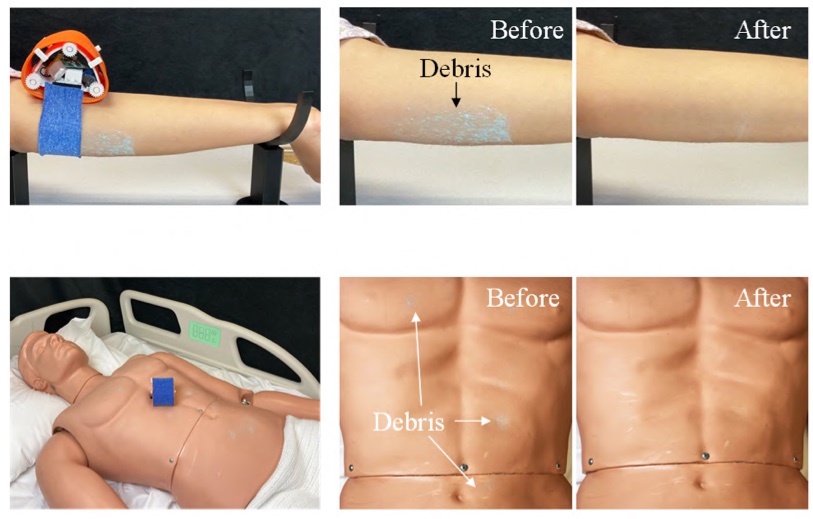

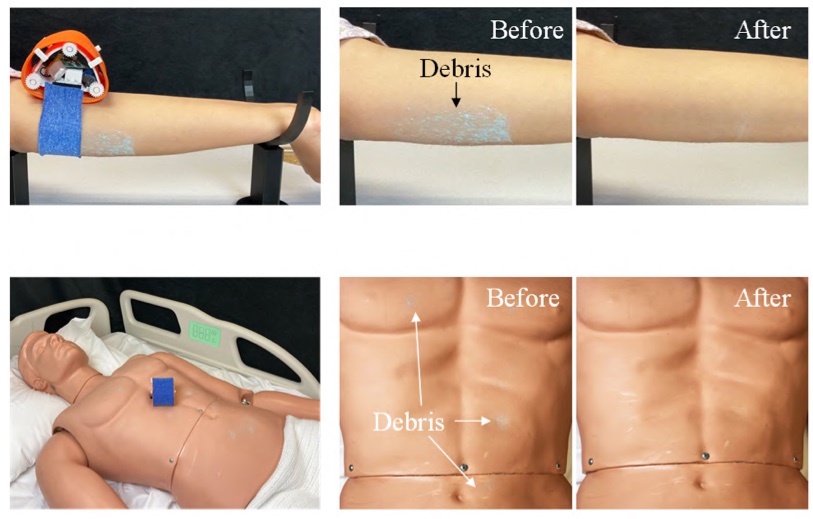

Fukang Liu,

Vaidehi Patil,

Zackory Erickson,

and Zeynep Temel

ROBIO, 2022

[PDF]


@inproceedings{liu2022characterization,

title={Characterization of a Meso-Scale Wearable Robot for Bathing Assistance},

author={Liu, Fukang and Patil, Vaidehi and Erickson, Zackory and Temel, Zeynep},

booktitle={2022 IEEE International Conference on Robotics and Biomimetics (ROBIO)},

pages={2146--2152},

year={2022},

organization={IEEE}

}

Robotic bathing assistance has long been considered an important and practical task in healthcare. Yet, achieving flexible and efficient cleaning on the human body is challenging, since washing the body involves direct human-robot physical contact, and simple, safe, and effective devices are needed for bathing and hygiene. In this paper, we present a meso-scale wearable robot that can locomote along the human body to provide bathing and skin care assistance. We evaluated the cleaning performance of the robot system under different scenarios. Experiments on a pipe show that the robot can achieve a cleaning percentage of over 92% with two types of stretchable fabrics. The robot removed most of the debris, with average values of 94% on a human arm and 93% on a manikin torso. The results demonstrate that the robot exhibits high performance in cleaning tasks.


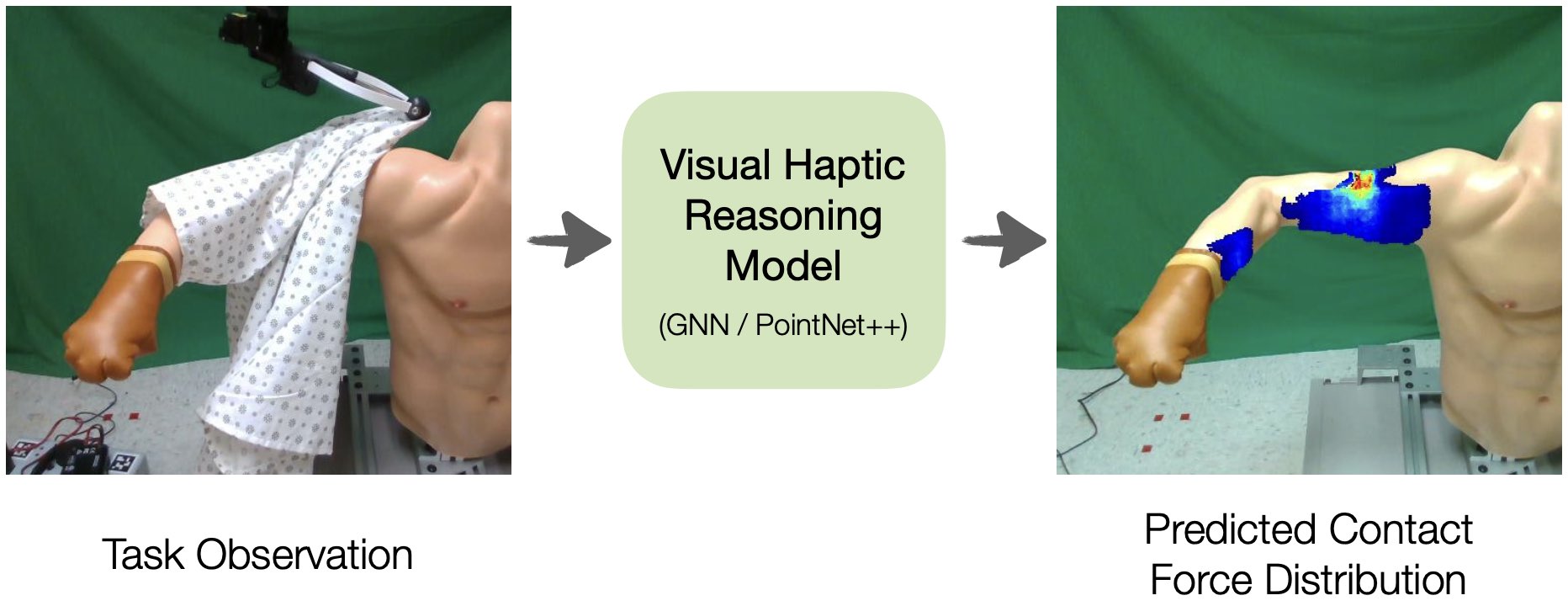

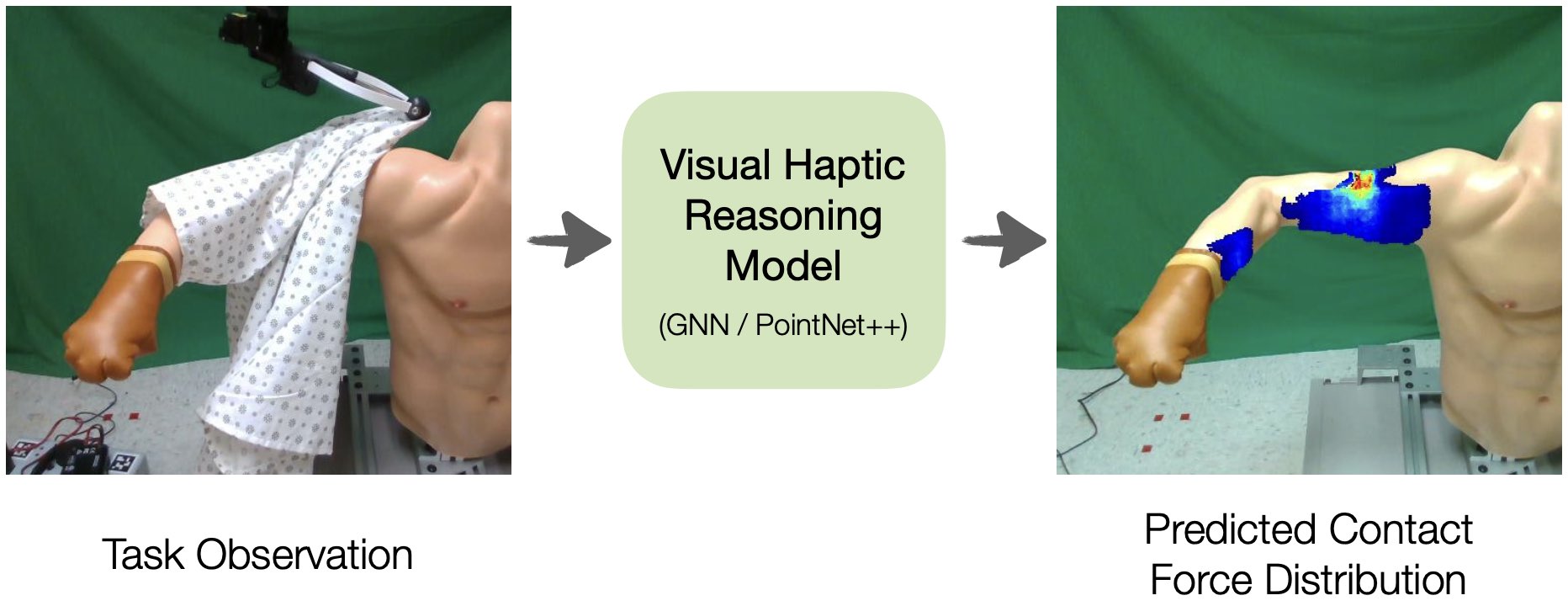

Yufei Wang,

David Held,

and Zackory Erickson

IEEE Robotics and Automation Letters (RA-L), 2022

[PDF]

[Project Page]


@article{wang2022visual,

title={Visual Haptic Reasoning: Estimating Contact Forces by Observing Deformable Object Interactions},

author={Wang, Yufei and Held, David and Erickson, Zackory},

journal={IEEE Robotics and Automation Letters},

year={2022},

organization={IEEE}

}

Robotic manipulation of highly deformable cloth presents a promising opportunity to assist people with several daily tasks, such as washing dishes; folding laundry; or dressing, bathing, and hygiene assistance for individuals with severe motor impairments. In this work, we introduce a formulation that enables a collaborative robot to perform visual haptic reasoning with cloth: the act of inferring the location and magnitude of applied forces during physical interaction. We present two distinct model representations, trained in physics simulation, that enable haptic reasoning using only visual and robot kinematic observations. We conduct quantitative evaluations of these models in simulation for robot-assisted dressing, bathing, and dish washing tasks, and demonstrate that the trained models can generalize across different tasks with varying interactions, human body sizes, and object shapes. We also present results with a real-world mobile manipulator, which used our simulation-trained models to estimate applied contact forces while performing physically assistive tasks with cloth. Videos can be found at our project webpage.


Christian Schöffmann,

Zackory Erickson,

and Hubert Zangl

IEEE Robotics and Automation Letters (RA-L), 2022

[PDF]

[Code]


@article{schoffmann2022capsense,

author={Schöffmann, Christian and Erickson, Zackory and Zangl, Hubert},

journal={IEEE Robotics and Automation Letters},

title={CapSense: A Real-Time Capacitive Sensor Simulation Framework for Physical Human-Robot Interaction},

year={2022},

volume={7},

number={4},

pages={9929-9936},

doi={10.1109/LRA.2022.3191942}

}

This article presents CapSense, a real-time open-source capacitive sensor simulation framework for robotic applications. CapSense provides raw data of capacitive proximity sensors based on a fast and efficient 3D finite-element method (FEM) implementation. The proposed framework is interfaced to off-the-shelf robot and physics simulation environments to couple dynamic interaction of the environment with an electro-static solver for capacitance computation in real-time. The FEM method proposed in this article relies on a static tetrahedral mesh of the sensor surrounding without a-posteriori re-meshing and achieves high update rates by an adaptive update step. CapSense is flexible due to various configuration parameters (i.e. number, size, shape and location of electrodes) and serves as a platform for investigation of capacitive sensors in robotic applications. By using the proposed framework, researchers can simulate capacitive sensors in different scenarios and investigate these sensors and their configuration prior to installation and fabrication of real hardware. The proposed framework opens new research opportunities via sim-to-real transfer of capacitive sensing. The simulation approach is validated by comparing real-world results of different scenarios with simulation results. In order to showcase the benefits of CapSense in physical Human-Robot Interaction (pHRI), the framework is evaluated in a robotic healthcare scenario.


Kavya Puthuveetil,

Charles C. Kemp,

and Zackory Erickson

IEEE Robotics and Automation Letters (RA-L), 2022

[PDF]

[Project Page]

[Code]


[Video]

@article{puthuveetil2022bodies,

author={Puthuveetil, Kavya and Kemp, Charles C. and Erickson, Zackory},

journal={IEEE Robotics and Automation Letters},

title={Bodies Uncovered: Learning to Manipulate Real Blankets Around People via Physics Simulations},

year={2022},

volume={7},

number={2},

pages={1984-1991},

doi={10.1109/LRA.2022.3142732}

}

While robots present an opportunity to provide physical assistance to older adults and people with mobility impairments in bed, people frequently rest in bed with blankets that cover the majority of their body. To provide assistance for many daily self-care tasks, such as bathing, dressing, or ambulating, a caregiver must first uncover blankets from part of a person's body. In this work, we introduce a formulation for robotic bedding manipulation around people in which a robot uncovers a blanket from a target body part while ensuring the rest of the human body remains covered. We compare two approaches for optimizing policies which provide a robot with grasp and release points that uncover a target part of the body: 1) reinforcement learning and 2) self-supervised learning with optimization to generate training data. We trained and conducted evaluations of these policies in physics simulation environments that consist of a deformable cloth mesh covering a simulated human lying supine on a bed. In addition, we transfer simulation-trained policies to a real mobile manipulator and demonstrate that it can uncover a blanket from target body parts of a manikin lying in bed. Source code is available online.


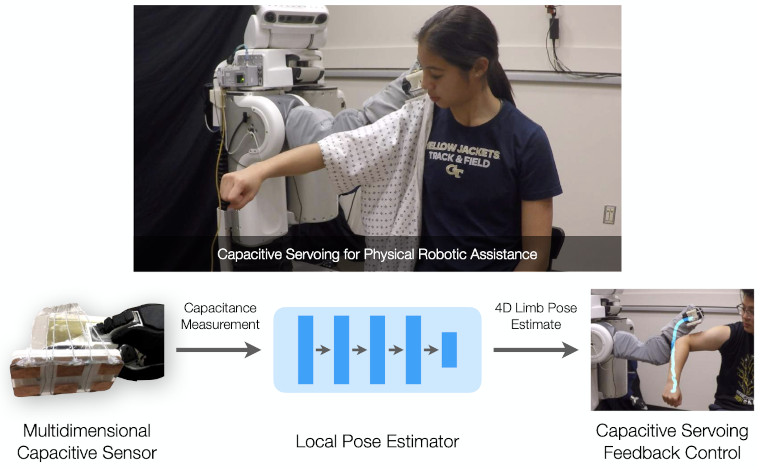

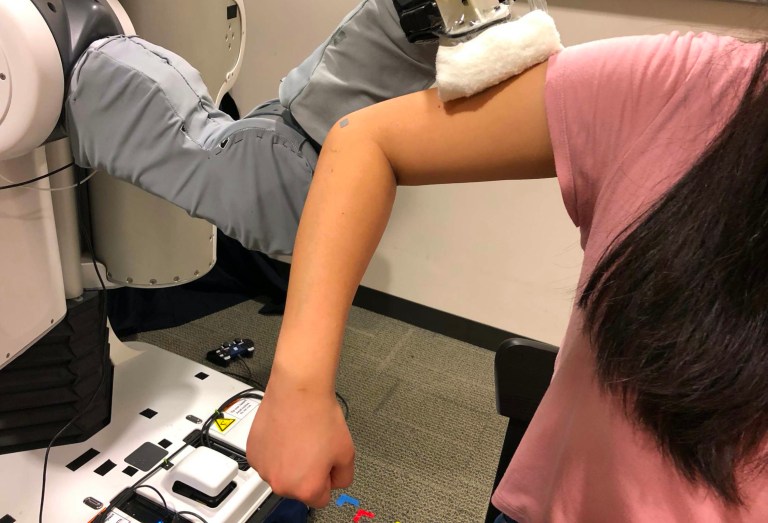

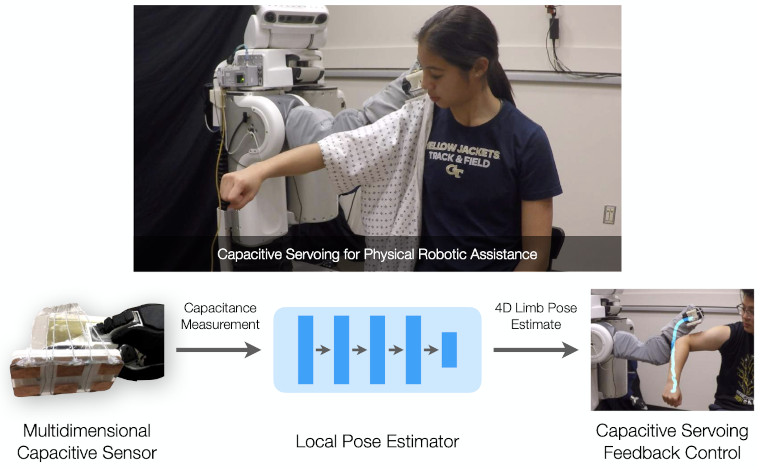

Zackory Erickson,

Henry M. Clever,

Vamsee Gangaram,

Eliot Xing,

Greg Turk,

C. Karen Liu,

and Charles C. Kemp

IEEE Transactions on Robotics (T-RO), 2022

[PDF]


@article{erickson2022characterizing,

title={Characterizing Multidimensional Capacitive Servoing for Physical Human-Robot Interaction},

author={Erickson, Zackory and Clever, Henry M and Gangaram, Vamsee and Xing, Eliot and Turk, Greg and Liu, C Karen and Kemp, Charles C},

journal={IEEE Transactions on Robotics (T-RO)},

year={2022}

}

Towards the goal of robots performing robust and intelligent physical interactions with people, it is crucial that robots are able to accurately sense the human body, follow trajectories around the body, and track human motion. This study introduces a capacitive servoing control scheme that allows a robot to sense and navigate around human limbs during close physical interactions. Capacitive servoing leverages temporal measurements from a multi-electrode capacitive sensor array mounted on a robot's end effector to estimate the relative position and orientation (pose) of a nearby human limb. Capacitive servoing then uses these human pose estimates from a data-driven pose estimator within a feedback control loop in order to maneuver the robot's end effector around the surface of a human limb. We provide a design overview of capacitive sensors for human-robot interaction and then investigate the performance and generalization of capacitive servoing through an experiment with 12 human participants. The results indicate that multidimensional capacitive servoing enables a robot's end effector to move proximally or distally along human limbs while adapting to human pose. Results from a cross-validation experiment further show that capacitive servoing generalizes well across people with different body sizes.


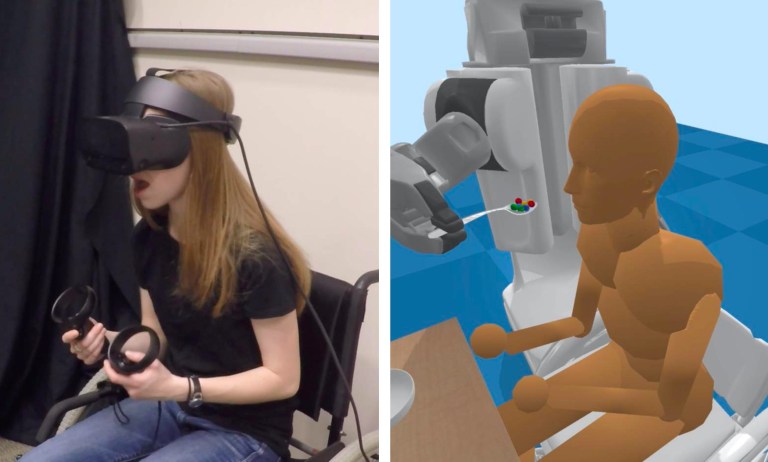

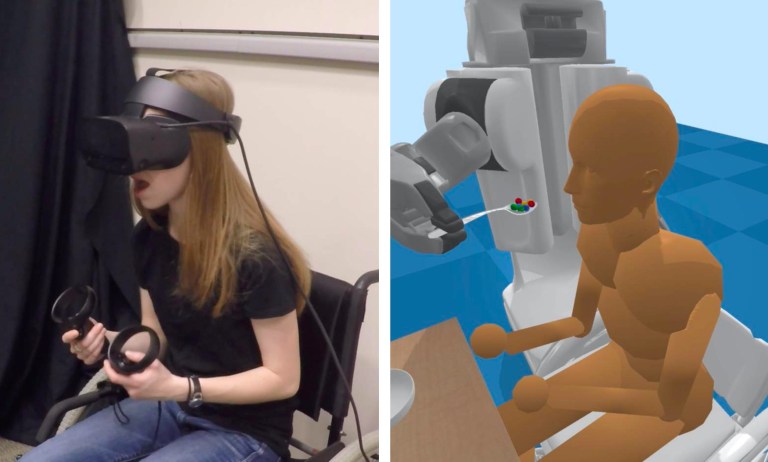

Zackory Erickson*,

Yijun Gu*,

and Charles C. Kemp

RO-MAN, 2020

[PDF]

[Code]


[Video]

@inproceedings{erickson2020assistivevr,

title={Assistive VR Gym: Interactions with Real People to Improve Virtual Assistive Robots},

author={Erickson, Zackory and Gu, Yijun and Kemp, Charles C},

booktitle={2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN)},

pages={299--306},

year={2020},

organization={IEEE}

}

Versatile robotic caregivers could benefit millions of people worldwide, including older adults and people with disabilities. Recent work has explored how robotic caregivers can learn to interact with people through physics simulations, yet transferring what has been learned to real robots remains challenging. Virtual reality (VR) has the potential to help bridge the gap between simulations and the real world. We present Assistive VR Gym (AVR Gym), which enables real people to interact with virtual assistive robots. We also provide evidence that AVR Gym can help researchers improve the performance of simulation-trained assistive robots with real people. Prior to AVR Gym, we trained robot control policies (Original Policies) solely in simulation for four robotic caregiving tasks (robot-assisted feeding, drinking, itch scratching, and bed bathing) with two simulated robots (PR2 from Willow Garage and Jaco from Kinova). With AVR Gym, we developed Revised Policies based on insights gained from testing the Original policies with real people. Through a formal study with eight participants in AVR Gym, we found that the Original policies performed poorly, the Revised policies performed significantly better, and that improvements to the biomechanical models used to train the Revised policies resulted in simulated people that better match real participants. Notably, participants significantly disagreed that the Original policies were successful at assistance, but significantly agreed that the Revised policies were successful at assistance. Overall, our results suggest that VR can be used to improve the performance of simulation-trained control policies with real people without putting people at risk, thereby serving as a valuable stepping stone to real robotic assistance.


Zackory Erickson,

Eliot Xing,

Bharat Srirangam,

Sonia Chernova,

and Charles C. Kemp

IROS, 2020

[PDF]

[Code]


[Video]

@inproceedings{erickson2020multimodal,

title={Multimodal material classification for robots using spectroscopy and high resolution texture imaging},

author={Erickson, Zackory and Xing, Eliot and Srirangam, Bharat and Chernova, Sonia and Kemp, Charles C},

booktitle={2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

pages={10452--10459},

year={2020},

organization={IEEE}

}

Material recognition can help inform robots about how to properly interact with and manipulate real-world objects. In this paper, we present a multimodal sensing technique, leveraging near-infrared spectroscopy and close-range high resolution texture imaging, that enables robots to estimate the materials of household objects. We release a dataset of high resolution texture images and spectral measurements collected from a mobile manipulator that interacted with 144 household objects. We then present a neural network architecture that learns a compact multimodal representation of spectral measurements and texture images. When generalizing material classification to new objects, we show that this multimodal representation enables a robot to recognize materials with greater performance as compared to prior state-of-the-art approaches. Finally, we present how a robot can combine this high resolution local sensing with images from the robot's head-mounted camera to achieve accurate material classification over a scene of objects on a table.


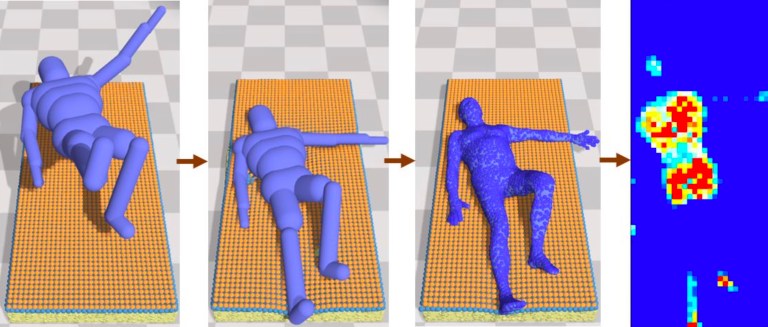

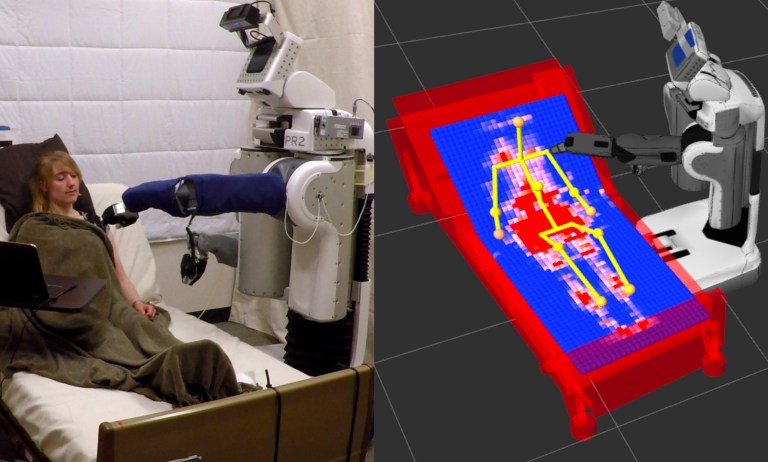

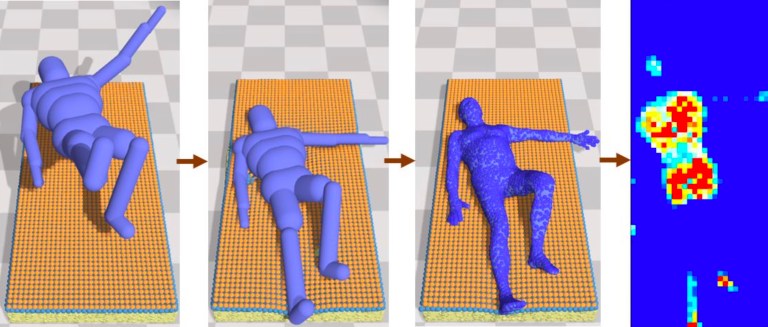

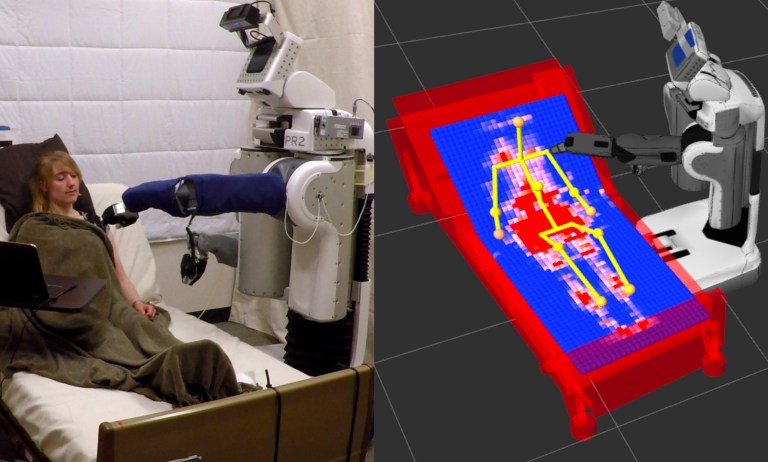

Henry M. Clever,

Zackory Erickson,

Ariel Kapusta,

Greg Turk,

C. Karen Liu,

and Charles C. Kemp

CVPR, 2020

(Accepted for Oral Presentation)

[PDF]

[Code]

[Bibtex]

[Abstract]

[Video]

@inproceedings{clever2020bodies,

title={Bodies at rest: 3d human pose and shape estimation from a pressure image using synthetic data},

author={Clever, Henry M and Erickson, Zackory and Kapusta, Ariel and Turk, Greg and Liu, C Karen and Kemp, Charles C},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={6215--6224},

year={2020}

}

People spend a substantial part of their lives at rest in bed. 3D human pose and shape estimation for this activity would have numerous beneficial applications, yet line-of-sight perception is complicated by occlusion from bedding. Pressure sensing mats are a promising alternative, but training data is challenging to collect at scale. We describe a physics-based method that simulates human bodies at rest in a bed with a pressure sensing mat, and present PressurePose, a synthetic dataset with 206K pressure images with 3D human poses and shapes. We also present PressureNet, a deep learning model that estimates human pose and shape given a pressure image and gender. PressureNet incorporates a pressure map reconstruction (PMR) network that models pressure image generation to promote consistency between estimated 3D body models and pressure image input. In our evaluations, PressureNet performed well with real data from participants in diverse poses, even though it had only been trained with synthetic data. When we ablated the PMR network, performance dropped substantially.


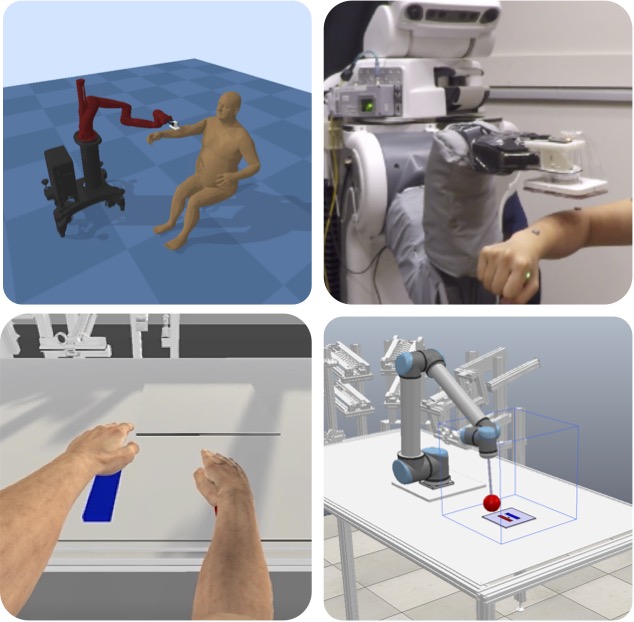

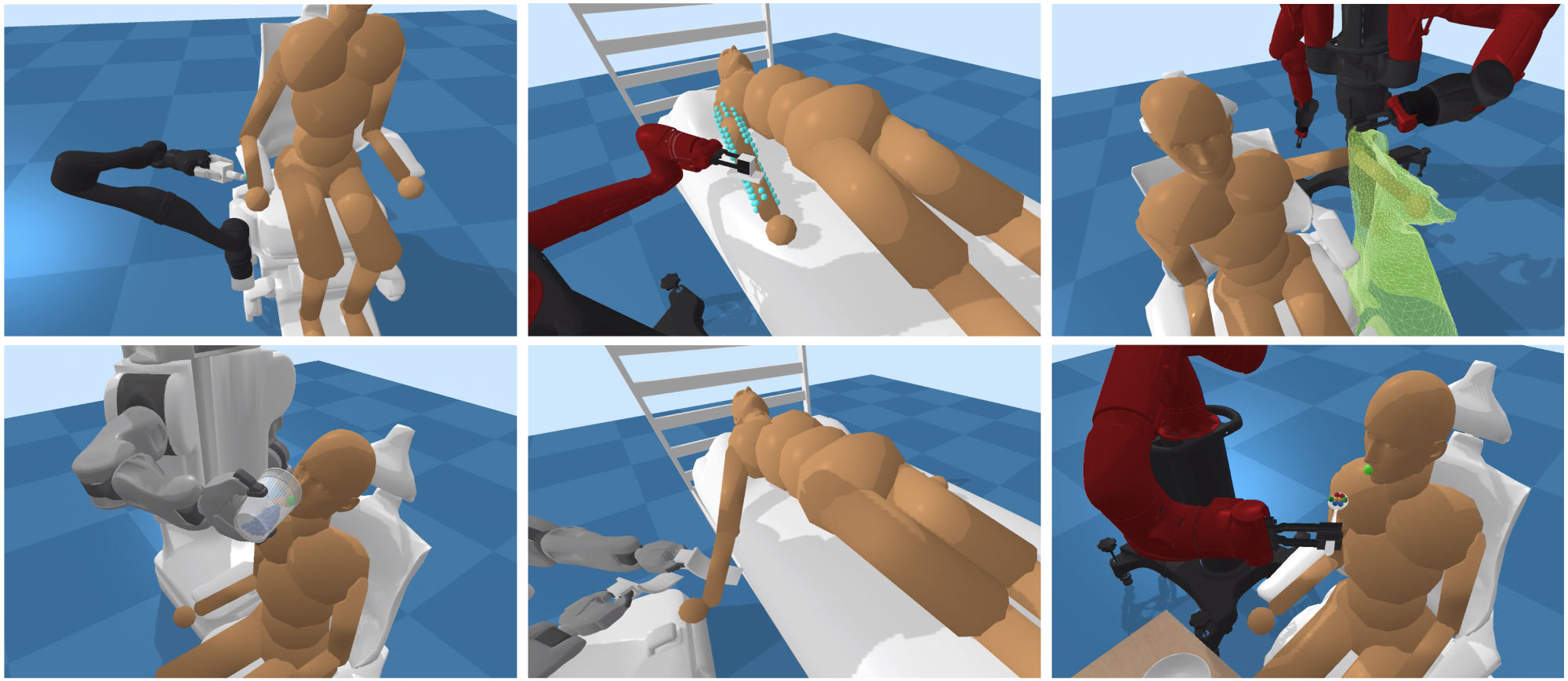

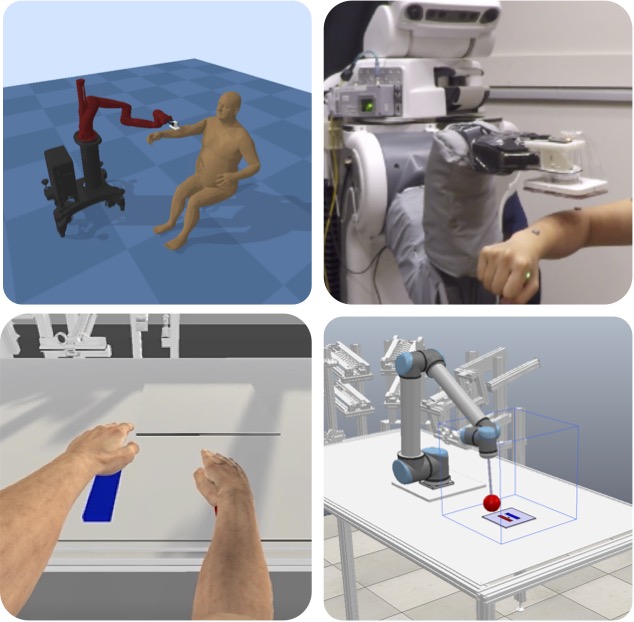

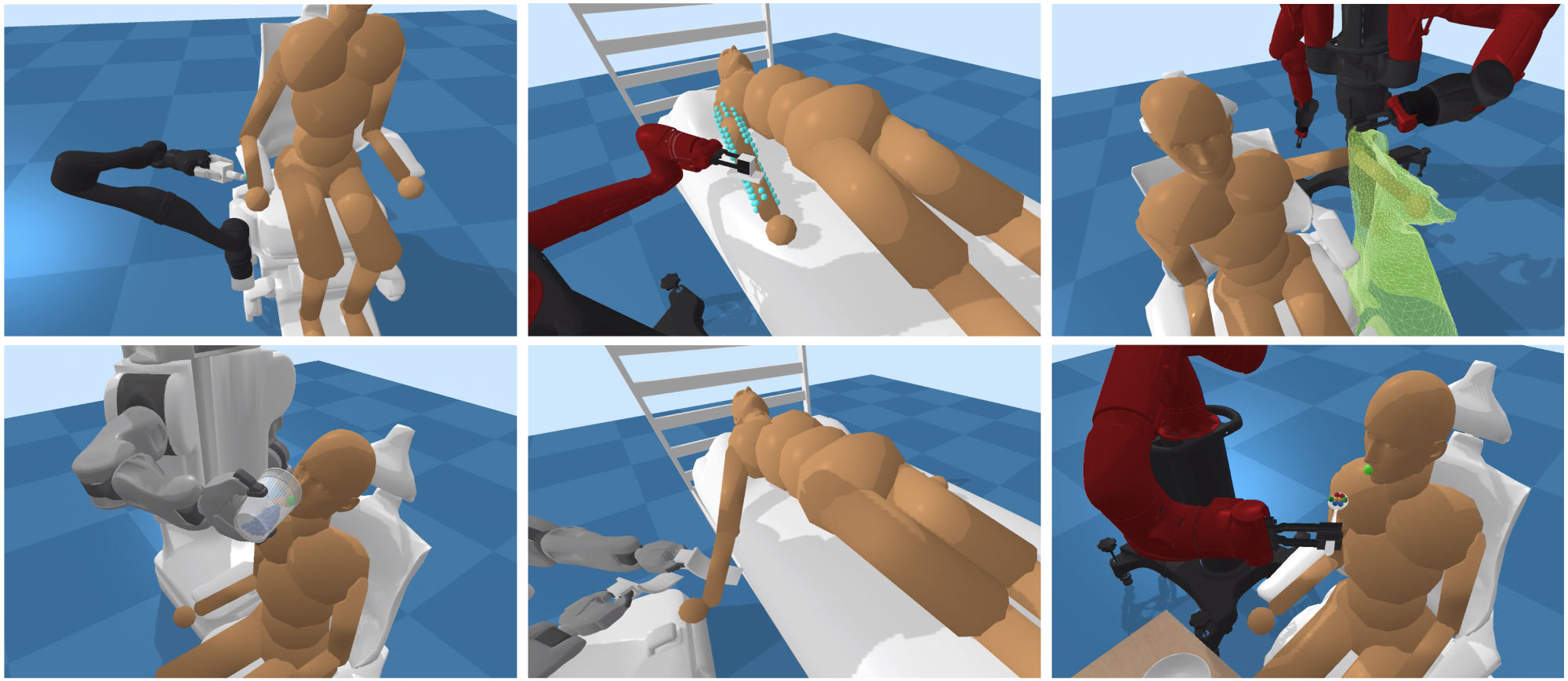

Zackory Erickson,

Vamsee Gangaram,

Ariel Kapusta,

C. Karen Liu,

and Charles C. Kemp

ICRA, 2020

[PDF]

[Code]

[Bibtex]

[Abstract]

[Video]

@inproceedings{erickson2020assistive,

title={Assistive gym: A physics simulation framework for assistive robotics},

author={Erickson, Zackory and Gangaram, Vamsee and Kapusta, Ariel and Liu, C Karen and Kemp, Charles C},

booktitle={2020 IEEE International Conference on Robotics and Automation (ICRA)},

pages={10169--10176},

year={2020},

organization={IEEE}

}

Autonomous robots have the potential to serve as versatile caregivers that improve quality of life for millions of people worldwide. Yet, conducting research in this area presents numerous challenges, including the risks of physical interaction between people and robots. Physics simulations have been used to optimize and train robots for physical assistance, but have typically focused on a single task. In this paper, we present Assistive Gym, an open source physics simulation framework for assistive robots that models multiple tasks. It includes six simulated environments in which a robotic manipulator can attempt to assist a person with activities of daily living (ADLs): itch scratching, drinking, feeding, body manipulation, dressing, and bathing. Assistive Gym models a person's physical capabilities and preferences for assistance, which are used to provide a reward function. We present baseline policies trained using reinforcement learning for four different commercial robots in the six environments. We demonstrate that modeling human motion results in better assistance and we compare the performance of different robots. Overall, we show that Assistive Gym is a promising tool for assistive robotics research.


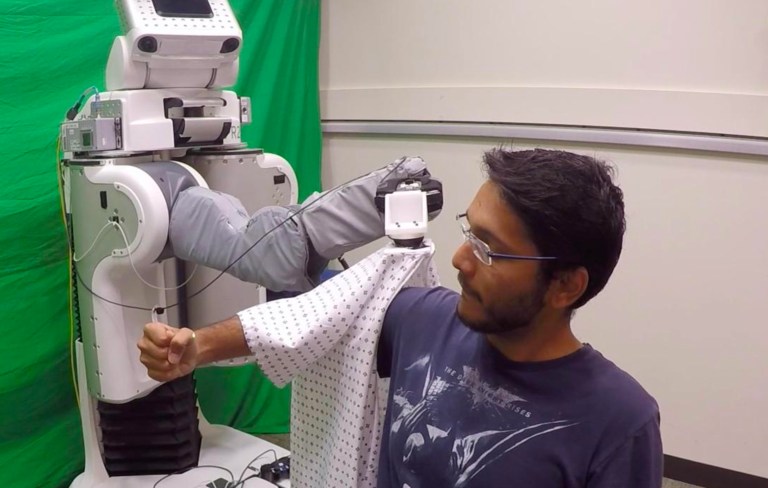

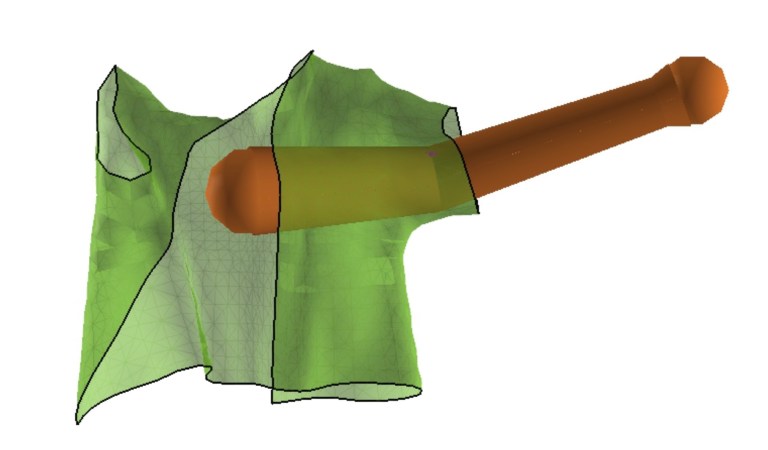

Alexander Clegg,

Zackory Erickson,

Patrick Grady,

Greg Turk,

Charles C. Kemp,

and C. Karen Liu

RA-L, 2020

[PDF]

[Bibtex]

[Abstract]

[Video]

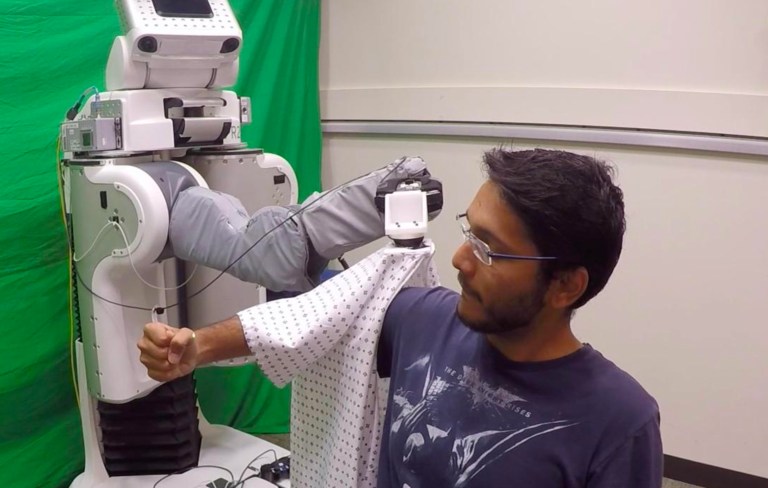

@article{clegg2020learning,

title={Learning to collaborate from simulation for robot-assisted dressing},

author={Clegg, Alexander and Erickson, Zackory and Grady, Patrick and Turk, Greg and Kemp, Charles C and Liu, C Karen},

journal={IEEE Robotics and Automation Letters},

volume={5},

number={2},

pages={2746--2753},

year={2020},

publisher={IEEE}

}

We investigated the application of haptic feedback control and deep reinforcement learning (DRL) to robot-assisted dressing. Our method uses DRL to simultaneously train human and robot control policies as separate neural networks using physics simulations. In addition, we modeled variations in human impairments relevant to dressing, including unilateral muscle weakness, involuntary arm motion, and limited range of motion. Our approach resulted in control policies that successfully collaborate in a variety of simulated dressing tasks involving a hospital gown and a T-shirt. In addition, our approach resulted in policies trained in simulation that enabled a real PR2 robot to dress the arm of a humanoid robot with a hospital gown. We found that training policies for specific impairments dramatically improved performance; that controller execution speed could be scaled after training to reduce the robot's speed without steep reductions in performance; that curriculum learning could be used to lower applied forces; and that multi-modal sensing, including a simulated capacitive sensor, improved performance.


Daehyung Park,

Yuuna Hoshi,

Harshal P. Mahajan,

Ho Keun Kim,

Zackory Erickson,

Wendy A. Rogers,

and Charles C. Kemp

Robotics and Autonomous Systems, 2020

[PDF]

[Bibtex]

[Abstract]

[Video]

@article{park2020active,

title={Active robot-assisted feeding with a general-purpose mobile manipulator: Design, evaluation, and lessons learned},

author={Park, Daehyung and Hoshi, Yuuna and Mahajan, Harshal P and Kim, Ho Keun and Erickson, Zackory and Rogers, Wendy A and Kemp, Charles C},

journal={Robotics and Autonomous Systems},

volume={124},

pages={103344},

year={2020},

publisher={Elsevier}

}

Eating is an essential activity of daily living (ADL) for staying healthy and living at home independently. Although numerous assistive devices have been introduced, many people with disabilities are still restricted from independent eating due to the devices' physical or perceptual limitations. In this work, we present a new meal-assistance system and evaluations of this system with people with motor impairments. We also discuss learned lessons and design insights based on the evaluations. The meal-assistance system uses a general-purpose mobile manipulator, a Willow Garage PR2, which has the potential to serve as a versatile form of assistive technology. Our active feeding framework enables the robot to autonomously deliver food to the user's mouth, reducing the need for head movement by the user. The user interface, visually-guided behaviors, and safety tools allow people with severe motor impairments to successfully use the system. We evaluated our system with a total of 10 able-bodied participants and 9 participants with motor impairments. Both groups of participants successfully ate various foods using the system and reported high rates of success for the system's autonomous behaviors. In general, participants who operated the system reported that it was comfortable, safe, and easy to use.


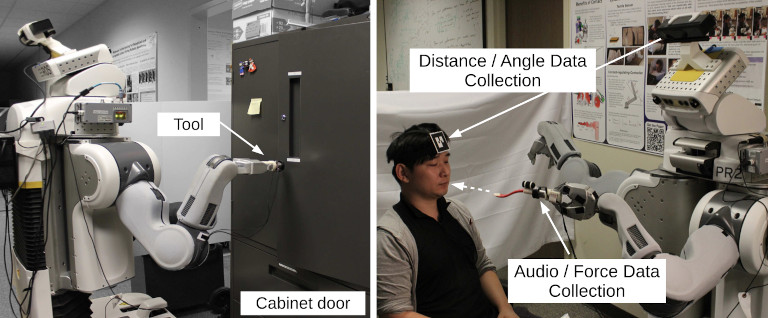

Zackory Erickson,

Henry M. Clever,

Vamsee Gangaram,

Greg Turk,

C. Karen Liu,

and Charles C. Kemp

ICORR, 2019

(Best Student Paper Award)

[PDF]

[Bibtex]

[Abstract]

[Video]

@inproceedings{erickson2019multidimensional,

title={Multidimensional capacitive sensing for robot-assisted dressing and bathing},

author={Erickson, Zackory and Clever, Henry M and Gangaram, Vamsee and Turk, Greg and Liu, C Karen and Kemp, Charles C},

booktitle={2019 IEEE 16th International Conference on Rehabilitation Robotics (ICORR)},

pages={224--231},

year={2019},

organization={IEEE}

}

Robotic assistance presents an opportunity to benefit the lives of many people with physical disabilities, yet accurately sensing the human body and tracking human motion remain difficult for robots. We present a multidimensional capacitive sensing technique that estimates the local pose of a human limb in real time. A key benefit of this sensing method is that it can sense the limb through opaque materials, including fabrics and wet cloth. Our method uses a multielectrode capacitive sensor mounted to a robot's end effector. A neural network model estimates the position of the closest point on a person's limb and the orientation of the limb's central axis relative to the sensor's frame of reference. These pose estimates enable the robot to move its end effector with respect to the limb using feedback control. We demonstrate that a PR2 robot can use this approach with a custom six electrode capacitive sensor to assist with two activities of daily living—dressing and bathing. The robot pulled the sleeve of a hospital gown onto able-bodied participants' right arms, while tracking human motion. When assisting with bathing, the robot moved a soft wet washcloth to follow the contours of able-bodied participants' limbs, cleaning their surfaces. Overall, we found that multidimensional capacitive sensing presents a promising approach for robots to sense and track the human body during assistive tasks that require physical human-robot interaction.


Zackory Erickson,

Nathan Luskey,

Sonia Chernova,

and Charles C. Kemp

RA-L, 2019

(Finalist, Best Paper Award in Service Robotics, ICRA 2019)

[PDF]

[Code]

[Bibtex]

[Abstract]

[Video]

@article{erickson2019classification,

title={Classification of household materials via spectroscopy},

author={Erickson, Zackory and Luskey, Nathan and Chernova, Sonia and Kemp, Charles C},

journal={IEEE Robotics and Automation Letters},

volume={4},

number={2},

pages={700--707},

year={2019},

publisher={IEEE}

}

Recognizing an object's material can inform a robot on the object's fragility or appropriate use. To estimate an object's material during manipulation, many prior works have explored the use of haptic sensing. In this paper, we explore a technique for robots to estimate the materials of objects using spectroscopy. We demonstrate that spectrometers provide several benefits for material recognition, including fast response times and accurate measurements with low noise. Furthermore, spectrometers do not require direct contact with an object. To explore this, we collected a dataset of spectral measurements from two commercially available spectrometers during which a robotic platform interacted with 50 flat material objects, and we show that a neural network model can accurately analyze these measurements. Due to the similarity between consecutive spectral measurements, our model achieved a material classification accuracy of 94.6% when given only one spectral sample per object. Similar to prior works with haptic sensors, we found that generalizing material recognition to new objects posed a greater challenge, for which we achieved an accuracy of 79.1% via leave-one-object-out cross-validation. Finally, we demonstrate how a PR2 robot can leverage spectrometers to estimate the materials of everyday objects found in the home. From this work, we find that spectroscopy poses a promising approach for material classification during robotic manipulation.
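Leave-one-object-out cross-validation, used above to measure how well material recognition generalizes to new objects, puts every measurement of one object into the test fold and trains on all other objects. The sketch below only illustrates that split; it is not the authors' code, and the function name and object labels are hypothetical.

```python
from typing import Iterator, List, Sequence, Tuple


def leave_one_object_out(
    object_ids: Sequence[str],
) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held_out_object, train_indices, test_indices), one fold per object.

    All samples of the held-out object go to the test fold, so the classifier
    never sees any measurement of that object during training.
    """
    for held_out in sorted(set(object_ids)):
        train = [i for i, obj in enumerate(object_ids) if obj != held_out]
        test = [i for i, obj in enumerate(object_ids) if obj == held_out]
        yield held_out, train, test


if __name__ == "__main__":
    # Hypothetical labels: one entry per spectral sample, naming its source object.
    ids = ["mug", "mug", "towel", "spoon", "towel"]
    for obj, train, test in leave_one_object_out(ids):
        print(obj, train, test)
```

Splitting by object rather than by sample matters because consecutive spectral measurements of the same object are very similar; a per-sample split would leak near-duplicates into training. scikit-learn's `LeaveOneGroupOut` produces the same folds when the object IDs are passed as `groups`.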


Ariel Kapusta,

Zackory Erickson,

Henry M. Clever,

Wenhao Yu,

C. Karen Liu,

Greg Turk,

and Charles C. Kemp

Autonomous Robots, 2019

[PDF]

[Bibtex]

[Abstract]

[Video]

@article{kapusta2019personalized,

title={Personalized collaborative plans for robot-assisted dressing via optimization and simulation},

author={Kapusta, Ariel and Erickson, Zackory and Clever, Henry M and Yu, Wenhao and Liu, C Karen and Turk, Greg and Kemp, Charles C},

journal={Autonomous Robots},

volume={43},

number={8},

pages={2183--2207},

year={2019},

publisher={Springer}

}

Robots could be a valuable tool for helping with dressing, but determining how a robot and a person with disabilities can collaborate to complete the task is challenging. We present task optimization of robot-assisted dressing (TOORAD), a method for generating a plan that consists of actions for both the robot and the person. TOORAD uses a multilevel optimization framework with heterogeneous simulations. The simulations model the physical interactions between the garment and the person being dressed, as well as the geometry and kinematics of the robot, human, and environment. Notably, the models for the human are personalized for an individual's geometry and physical capabilities. TOORAD searches over a constrained action space that interleaves the motions of the person and the robot with the person remaining still when the robot moves and vice versa. In order to adapt to real-world variation, TOORAD incorporates a measure of robot dexterity in its optimization, and the robot senses the person's body with a capacitive sensor to adapt its planned end effector trajectories. To evaluate TOORAD and gain insight into robot-assisted dressing, we conducted a study with six participants with physical disabilities who have difficulty dressing themselves. In the first session, we created models of the participants and surveyed their needs, capabilities, and views on robot-assisted dressing. TOORAD then found personalized plans and generated instructional visualizations for four of the participants, who returned for a second session during which they successfully put on both sleeves of a hospital gown with assistance from the robot. Overall, our work demonstrates the feasibility of generating personalized plans for robot-assisted dressing via optimization and physics-based simulation.


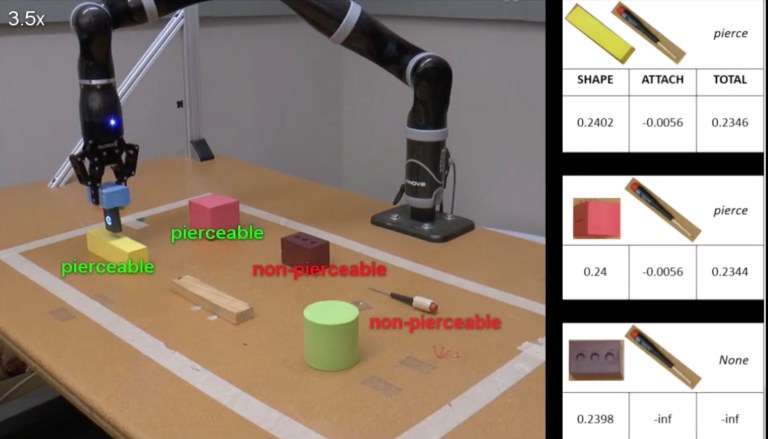

Lakshmi Nair,

Nithin Srikanth,

Zackory Erickson,

and Sonia Chernova

RSS, 2019

[PDF]

[Bibtex]

[Abstract]

[Video]

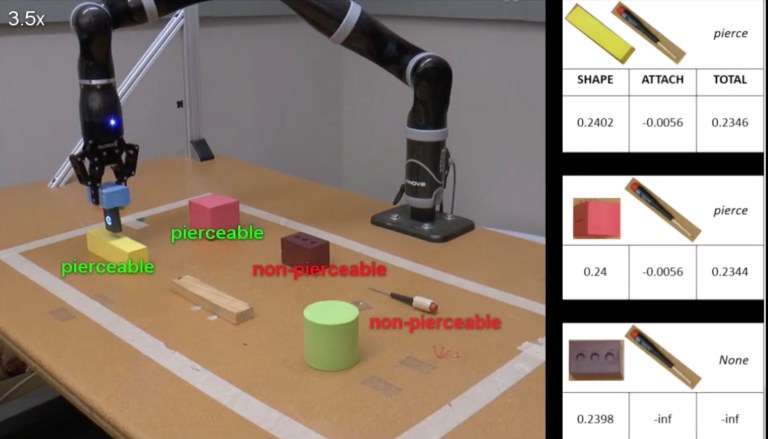

@inproceedings{nair2019autonomous,

title={Autonomous Tool Construction Using Part Shape and Attachment Prediction},

author={Nair, Lakshmi and Srikanth, Nithin and Erickson, Zackory M and Chernova, Sonia},

booktitle={Robotics: Science and Systems},

year={2019}

}

This work explores the problem of robot tool construction: creating tools from parts available in the environment. We advance the state-of-the-art in robotic tool construction by introducing an approach that enables the robot to construct a wider range of tools with greater computational efficiency. Specifically, given an action that the robot wishes to accomplish and a set of building parts available to the robot, our approach reasons about the shape of the parts and potential ways of attaching them, generating a ranking of part combinations that the robot then uses to construct and test the target tool. We validate our approach on the construction of five tools using a physical 7-DOF robot arm.
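The approach above produces a ranking of part combinations from reasoning about part shape and attachment. As a minimal sketch, assume each part already has a shape score for the tool's working end and for its handle, plus a score for how feasibly two parts attach; the paper predicts such quantities with learned models, whereas here they are given numbers, and multiplying the three scores is our illustrative assumption, not the paper's scoring rule. Part names are hypothetical.

```python
from typing import Dict, List, Tuple


def rank_tool_candidates(
    action_scores: Dict[str, float],
    handle_scores: Dict[str, float],
    attachment_scores: Dict[Tuple[str, str], float],
) -> List[Tuple[float, str, str]]:
    """Return (score, action_part, handle_part) tuples, best candidate first.

    action_scores: how well each part's shape suits the tool's working end.
    handle_scores: how well each part's shape suits the grasped end.
    attachment_scores: how feasibly two parts can be joined (missing pair = 0).
    """
    ranked = []
    for action_part, a_score in action_scores.items():
        for handle_part, h_score in handle_scores.items():
            if action_part == handle_part:
                continue  # a constructed tool needs two distinct parts
            attach = attachment_scores.get((action_part, handle_part), 0.0)
            ranked.append((a_score * h_score * attach, action_part, handle_part))
    ranked.sort(key=lambda item: item[0], reverse=True)
    return ranked


if __name__ == "__main__":
    ranking = rank_tool_candidates(
        action_scores={"flat_plate": 0.9, "cup": 0.6},
        handle_scores={"stick": 0.8, "cup": 0.3},
        attachment_scores={("flat_plate", "stick"): 0.9, ("cup", "stick"): 0.5,
                           ("flat_plate", "cup"): 0.2},
    )
    for score, head, handle in ranking:
        print(f"{head} + {handle}: {score:.3f}")
```

The robot would then try candidates in ranked order, building and testing each until one accomplishes the target action.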


Zackory Erickson,

Henry M. Clever,

Greg Turk,

C. Karen Liu,

and Charles C. Kemp

ICRA, 2018

[PDF]

[Bibtex]

[Abstract]

[Video]

@inproceedings{erickson2018deep,

title={Deep haptic model predictive control for robot-assisted dressing},

author={Erickson, Zackory and Clever, Henry M and Turk, Greg and Liu, C Karen and Kemp, Charles C},

booktitle={2018 IEEE International Conference on Robotics and Automation (ICRA)},

pages={4437--4444},

year={2018},

organization={IEEE}

}

Robot-assisted dressing offers an opportunity to benefit the lives of many people with disabilities, such as some older adults. However, robots currently lack common sense about the physical implications of their actions on people. The physical implications of dressing are complicated by non-rigid garments, which can result in a robot indirectly applying high forces to a person's body. We present a deep recurrent model that, when given a proposed action by the robot, predicts the forces a garment will apply to a person's body. We also show that a robot can provide better dressing assistance by using this model with model predictive control. The predictions made by our model only use haptic and kinematic observations from the robot's end effector, which are readily attainable. Collecting training data from real world physical human-robot interaction can be time consuming, costly, and put people at risk. Instead, we train our predictive model using data collected in an entirely self-supervised fashion from a physics-based simulation. We evaluated our approach with a PR2 robot that attempted to pull a hospital gown onto the arms of 10 human participants. With a 0.2 s prediction horizon, our controller succeeded at high rates and lowered applied force while navigating the garment around a person's fist and elbow without getting caught. Shorter prediction horizons resulted in significantly reduced performance with the sleeve catching on the participants' fists and elbows, demonstrating the value of our model's predictions. These behaviors of mitigating catches emerged from our deep predictive model and the controller objective function, which primarily penalizes high forces.
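The control loop described above scores candidate actions by the forces a model predicts they will cause and picks the cheapest one. The sketch below shows one sampling-based model predictive control step under stated assumptions: the learned recurrent force predictor is replaced by a hypothetical callable, and the 2D action space, candidate set, and cost weights are illustrative choices, not the paper's.

```python
from typing import Callable, Sequence, Tuple

Action = Tuple[float, float]  # hypothetical planar end-effector velocity (m/s)


def choose_action(
    candidates: Sequence[Action],
    predict_forces: Callable[[Action], Sequence[float]],
    goal_direction: Action,
    force_weight: float = 10.0,
) -> Action:
    """One step of sampling-based MPC: return the candidate with the lowest cost.

    The cost heavily penalizes predicted forces (sum of squares) and slightly
    rewards progress along goal_direction.
    """

    def cost(action: Action) -> float:
        forces = predict_forces(action)  # stand-in for the learned force predictor
        force_penalty = sum(f * f for f in forces)
        progress = action[0] * goal_direction[0] + action[1] * goal_direction[1]
        return force_weight * force_penalty - progress

    return min(candidates, key=cost)


if __name__ == "__main__":
    # Toy predictor: moving in -y presses the sleeve against the arm.
    def toy_predictor(action: Action) -> Sequence[float]:
        return [max(0.0, -action[1]) * 5.0]

    options = [(0.1, 0.0), (0.1, -0.05), (0.1, 0.05)]
    print(choose_action(options, toy_predictor, goal_direction=(1.0, 0.2)))
```

Re-solving this choice at every control step, with fresh haptic and kinematic observations feeding the predictor, is what lets behaviors such as steering around a catch emerge from the force-penalizing objective rather than from hand-written rules.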


Zackory Erickson,

Maggie Collier,

Ariel Kapusta,

and Charles C. Kemp

RA-L, 2018

[PDF]

[Bibtex]

[Abstract]

[Video]

@article{erickson2018tracking,

title={Tracking human pose during robot-assisted dressing using single-axis capacitive proximity sensing},

author={Erickson, Zackory and Collier, Maggie and Kapusta, Ariel and Kemp, Charles C},

journal={IEEE Robotics and Automation Letters},

volume={3},

number={3},

pages={2245--2252},

year={2018},

publisher={IEEE}

}

Dressing is a fundamental task of everyday living and robots offer an opportunity to assist people with motor impairments. While several robotic systems have explored robot-assisted dressing, few have considered how a robot can manage errors in human pose estimation, or adapt to human motion in real time during dressing assistance. In addition, estimating pose changes due to human motion can be challenging with vision-based techniques since dressing is often intended to visually occlude the body with clothing. We present a method to track a person's pose in real time using capacitive proximity sensing. This sensing approach gives direct estimates of distance with low latency, has a high signal-to-noise ratio, and has low computational requirements. Using our method, a robot can adjust for errors in the estimated pose of a person and physically follow the contours and movements of the person while providing dressing assistance. As part of an evaluation of our method, the robot successfully pulled the sleeve of a hospital gown and a cardigan onto the right arms of 10 human participants, despite arm motions and large errors in the initially estimated pose of the person's arm. We also show that a capacitive sensor is unaffected by visual occlusion of the body and can sense a person's body through cotton clothing.
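Because capacitive proximity sensing gives low-latency distance estimates, a robot can close a feedback loop directly on them. The sketch below is a one-dimensional proportional controller that keeps the sensor at a fixed distance from a limb; the target distance, gain, speed limit, and time step are hypothetical values, and the method in the paper additionally tracks the limb's pose as the sleeve moves along the arm.

```python
def approach_command(
    measured_distance: float,
    target_distance: float = 0.05,
    gain: float = 2.0,
    max_speed: float = 0.1,
) -> float:
    """Velocity command (m/s) along the sensor axis; positive moves toward the limb.

    Proportional control on the distance error, clipped to a speed limit.
    """
    error = measured_distance - target_distance  # > 0: end effector is too far away
    return max(-max_speed, min(max_speed, gain * error))


def simulate(initial_distance: float, steps: int = 200, dt: float = 0.05) -> float:
    """Close the loop against a stationary limb; return the final sensed distance."""
    distance = initial_distance
    for _ in range(steps):
        distance -= approach_command(distance) * dt  # moving toward the limb shrinks the gap
    return distance


if __name__ == "__main__":
    print(simulate(0.15))  # converges toward the 0.05 m target
```

The same loop backs the end effector away when the limb moves closer, which is the behavior that lets the robot follow the person's arm motions during dressing.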


Henry M. Clever,

Ariel Kapusta,

Daehyung Park,

Zackory Erickson,

Yash Chitalia,

and Charles C. Kemp

IROS, 2018

[PDF]

[Code]

[Bibtex]

[Abstract]

[Video]

@inproceedings{clever20183d,

title={3d human pose estimation on a configurable bed from a pressure image},

author={Clever, Henry M and Kapusta, Ariel and Park, Daehyung and Erickson, Zackory and Chitalia, Yash and Kemp, Charles C},

booktitle={2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

pages={54--61},

year={2018},

organization={IEEE}

}

Robots have the potential to assist people in bed, such as in healthcare settings, yet bedding materials like sheets and blankets can make observation of the human body difficult for robots. A pressure-sensing mat on a bed can provide pressure images that are relatively insensitive to bedding materials. However, prior work on estimating human pose from pressure images has been restricted to 2D pose estimates and flat beds. In this work, we present two convolutional neural networks to estimate the 3D joint positions of a person in a configurable bed from a single pressure image. The first network directly outputs 3D joint positions, while the second outputs a kinematic model that includes estimated joint angles and limb lengths. We evaluated our networks on data from 17 human participants with two bed configurations: supine and seated. Our networks achieved a mean joint position error of 77 mm when tested with data from people outside the training set, outperforming several baselines. We also present a simple mechanical model that provides insight into ambiguity associated with limbs raised off of the pressure mat, and demonstrate that Monte Carlo dropout can be used to estimate pose confidence in these situations. Finally, we provide a demonstration in which a mobile manipulator uses our network's estimated kinematic model to reach a location on a person's body in spite of the person being seated in a bed and covered by a blanket.
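Monte Carlo dropout, mentioned above for estimating pose confidence, keeps dropout active at test time and treats the spread of repeated predictions as an uncertainty signal. The sketch below applies the sampling procedure to a toy linear model rather than the paper's convolutional networks; the model, dropout rate, and number of passes are illustrative assumptions.

```python
import random
import statistics
from typing import Sequence, Tuple


def dropout_forward(
    x: Sequence[float], weights: Sequence[float], p: float, rng: random.Random
) -> float:
    """One stochastic pass of a toy linear model with (inverted) dropout on its inputs."""
    keep = 1.0 - p
    total = 0.0
    for xi, wi in zip(x, weights):
        if rng.random() < keep:  # each input survives with probability 1 - p
            total += wi * xi / keep  # rescale so the expected output is unchanged
    return total


def mc_dropout(
    x: Sequence[float],
    weights: Sequence[float],
    p: float = 0.2,
    passes: int = 200,
    seed: int = 0,
) -> Tuple[float, float]:
    """Keep dropout on at test time; return the mean and std over repeated passes."""
    rng = random.Random(seed)
    samples = [dropout_forward(x, weights, p, rng) for _ in range(passes)]
    return statistics.mean(samples), statistics.stdev(samples)


if __name__ == "__main__":
    mean, std = mc_dropout([1.0, 2.0, 3.0], [0.5, 0.5, 0.5], p=0.3)
    print(f"estimate {mean:.2f}, spread {std:.2f}")
```

In a pose estimator, the mean would serve as the joint estimate and a larger standard deviation would flag lower confidence, for example for a limb raised off the mat.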


Zackory Erickson,

Sonia Chernova,

and Charles C. Kemp

CoRL, 2017

[PDF]

[Code]

[Bibtex]

[Abstract]

@inproceedings{erickson2017semi,

title={Semi-supervised haptic material recognition for robots using generative adversarial networks},

author={Erickson, Zackory and Chernova, Sonia and Kemp, Charles C},

booktitle={Conference on Robot Learning},

pages={157--166},

year={2017},

organization={PMLR}

}

Material recognition enables robots to incorporate knowledge of material properties into their interactions with everyday objects. For example, material recognition opens up opportunities for clearer communication with a robot, such as "bring me the metal coffee mug", and recognizing plastic versus metal is crucial when using a microwave or oven. However, labeled training data are often more difficult for a robot to collect than unlabeled data. We present a semi-supervised learning approach for material recognition that uses generative adversarial networks (GANs) with haptic features such as force, temperature, and vibration. Our approach achieves state-of-the-art results and enables a robot to estimate the material class of household objects with ∼90% accuracy when 92% of the training data are unlabeled. We explore how well this approach can recognize the material of new objects and we discuss challenges facing generalization. To motivate learning from unlabeled training data, we also compare results against several common supervised learning classifiers. In addition, we have released the dataset used for this work which consists of time-series haptic measurements from a robot that conducted thousands of interactions with 72 household objects.


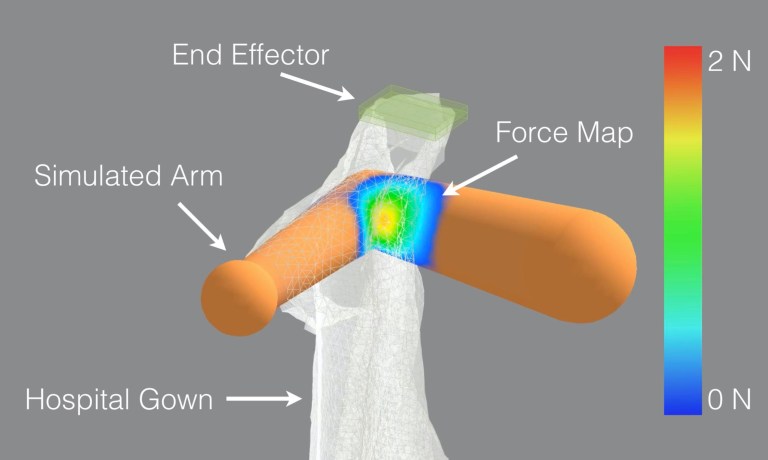

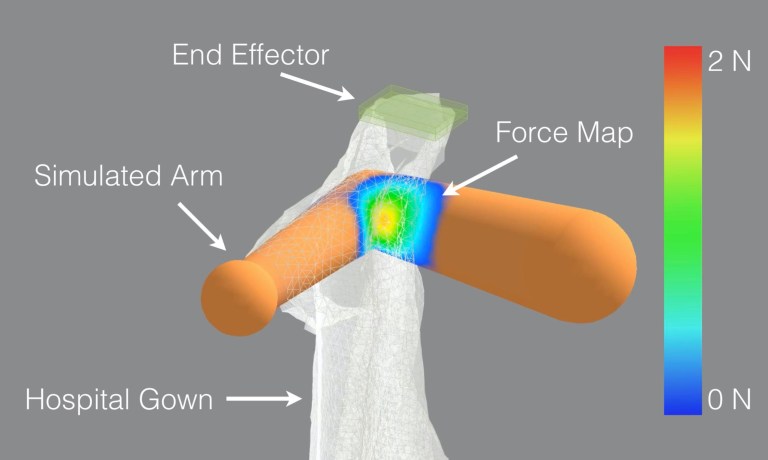

Zackory Erickson,

Alexander Clegg,

Wenhao Yu,

Greg Turk,

C. Karen Liu,

and Charles C. Kemp

ICRA, 2017

[PDF]

[Code]

[Bibtex]

[Abstract]

[Video]

@inproceedings{erickson2017does,

title={What does the person feel? learning to infer applied forces during robot-assisted dressing},

author={Erickson, Zackory and Clegg, Alexander and Yu, Wenhao and Turk, Greg and Liu, C Karen and Kemp, Charles C},

booktitle={2017 IEEE International Conference on Robotics and Automation (ICRA)},

pages={6058--6065},

year={2017},

organization={IEEE}

}

During robot-assisted dressing, a robot manipulates a garment in contact with a person's body. Inferring the forces applied to the person's body by the garment might enable a robot to provide more effective assistance and give the robot insight into what the person feels. However, complex mechanics govern the relationship between the robot's end effector and these forces. Using a physics-based simulation and data-driven methods, we demonstrate the feasibility of inferring forces across a person's body using only end effector measurements. Specifically, we present a long short-term memory (LSTM) network that at each time step takes a 9-dimensional input vector of force, torque, and velocity measurements from the robot's end effector and outputs a force map consisting of hundreds of inferred force magnitudes across the person's body. We trained and evaluated LSTMs on two tasks: pulling a hospital gown onto an arm and pulling shorts onto a leg. For both tasks, the LSTMs produced force maps that were similar to ground truth when visualized as heat maps across the limbs. We also evaluated their performance in terms of root-mean-square error. Their performance degraded when the end effector velocity was increased outside the training range, but generalized well to limb rotations. Overall, our results suggest that robots could learn to infer the forces people feel during robot-assisted dressing, although the extent to which this will generalize to the real world remains an open question.


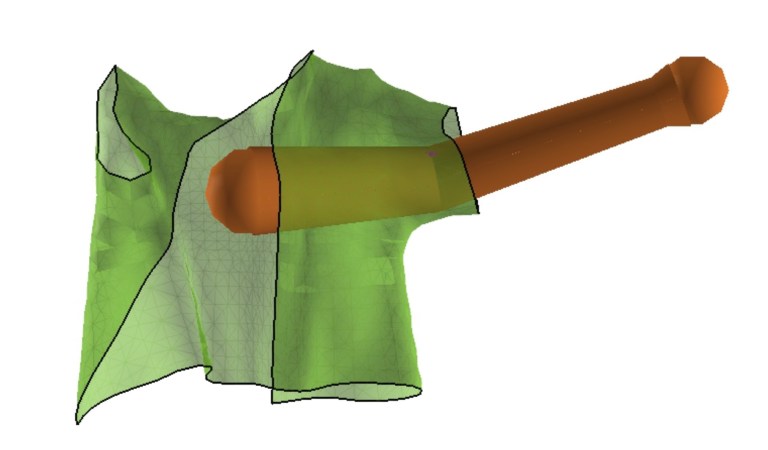

Alexander Clegg,

Wenhao Yu,

Zackory Erickson,

Jie Tan,

C. Karen Liu,

and Greg Turk

IROS, 2017

[PDF]

[Bibtex]

[Abstract]

[Video]

@inproceedings{clegg2017learning,

title={Learning to navigate cloth using haptics},

author={Clegg, Alexander and Yu, Wenhao and Erickson, Zackory and Tan, Jie and Liu, C Karen and Turk, Greg},

booktitle={2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

pages={2799--2805},

year={2017},

organization={IEEE}

}

We present a controller that allows an arm-like manipulator to navigate deformable cloth garments in simulation through the use of haptic information. The main challenge of such a controller is to avoid getting tangled in, tearing, or punching through the deforming cloth. Our controller aggregates force information from a number of haptic-sensing spheres all along the manipulator for guidance. Based on haptic forces, each individual sphere updates its target location, and the conflicts that arise among this set of desired positions are resolved by solving a constrained inverse kinematics problem. Reinforcement learning is used to train the controller for a single haptic-sensing sphere, where a training run is terminated (and thus penalized) when large forces are detected due to contact between the sphere and a simplified model of the cloth. In simulation, we demonstrate successful navigation of a robotic arm through a variety of garments, including an isolated sleeve, a jacket, a shirt, and shorts. Our controller outperforms two baseline controllers: one without haptics and another that was trained based on large forces between the sphere and cloth, but without early termination.
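The training setup above penalizes large contact forces by ending the run early. One way to read this, sketched below under the assumption of positive per-step rewards, is that early termination forfeits all remaining reward, so no explicit penalty term is needed; the force values, limit, and rewards are hypothetical, and this is not the authors' reinforcement learning code.

```python
from typing import Sequence, Tuple


def episode_return(
    contact_forces: Sequence[float],
    step_rewards: Sequence[float],
    force_limit: float,
) -> Tuple[float, int, bool]:
    """Sum rewards until a contact force exceeds force_limit, then stop the episode.

    Returns (return, steps_completed, terminated_early). With positive per-step
    rewards, stopping early forfeits the remaining reward, which is how early
    termination acts as a penalty without an explicit penalty term.
    """
    total = 0.0
    steps = 0
    for force, reward in zip(contact_forces, step_rewards):
        if force > force_limit:
            return total, steps, True
        total += reward
        steps += 1
    return total, steps, False


if __name__ == "__main__":
    print(episode_return([1.0, 2.0, 10.0, 1.0], [1.0, 1.0, 1.0, 1.0], force_limit=5.0))
```

This contrasts with the second baseline in the abstract, which was trained on large forces but without early termination.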


Daehyung Park,

Hokeun Kim,

Yuuna Hoshi,

Zackory Erickson,

Ariel Kapusta,

and Charles C. Kemp

IROS, 2017

[PDF]

[Code]

[Bibtex]

[Abstract]

[Video]

@inproceedings{park2017multimodal,

title={A multimodal execution monitor with anomaly classification for robot-assisted feeding},

author={Park, Daehyung and Kim, Hokeun and Hoshi, Yuuna and Erickson, Zackory and Kapusta, Ariel and Kemp, Charles C},

booktitle={2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

pages={5406--5413},

year={2017},

organization={IEEE}

}

Activities of daily living (ADLs) are important for quality of life. Robotic assistance offers the opportunity for people with disabilities to perform ADLs on their own. However, when a complex semi-autonomous system provides real-world assistance, occasional anomalies are likely to occur. Robots that can detect, classify and respond appropriately to common anomalies have the potential to provide more effective and safer assistance. We introduce a multimodal execution monitor to detect and classify anomalous executions when robots operate near humans. Our system builds on our past work on multimodal anomaly detection. Our new monitor classifies the type and cause of common anomalies using an artificial neural network. We implemented and evaluated our execution monitor in the context of robot-assisted feeding with a general-purpose mobile manipulator. In our evaluations, our monitor outperformed baseline methods from the literature. It succeeded in detecting 12 common anomalies from 8 able-bodied participants with 83% accuracy and classifying the types and causes of the detected anomalies with 90% and 81% accuracies, respectively. We then performed an in-home evaluation with Henry Evans, a person with severe quadriplegia. With our system, Henry successfully fed himself while the monitor detected, classified the types, and classified the causes of anomalies with 86%, 90%, and 54% accuracy, respectively.


Daehyung Park,

Zackory Erickson,

Tapomayukh Bhattacharjee,

and Charles C. Kemp

ICRA, 2016

[PDF]

[Code]

[Bibtex]

[Abstract]

[Video]

@inproceedings{park2016multimodal,

title={Multimodal execution monitoring for anomaly detection during robot manipulation},

author={Park, Daehyung and Erickson, Zackory and Bhattacharjee, Tapomayukh and Kemp, Charles C},

booktitle={2016 IEEE International Conference on Robotics and Automation (ICRA)},

pages={407--414},

year={2016},

organization={IEEE}

}

Online detection of anomalous execution can be valuable for robot manipulation, enabling robots to operate more safely, determine when a behavior is inappropriate, and otherwise exhibit more common sense. By using multiple complementary sensory modalities, robots could potentially detect a wider variety of anomalies, such as anomalous contact or a loud utterance by a human. However, task variability and the potential for false positives make online anomaly detection challenging, especially for long-duration manipulation behaviors. In this paper, we provide evidence for the value of multimodal execution monitoring and the use of a detection threshold that varies based on the progress of execution. Using a data-driven approach, we train an execution monitor that runs in parallel to a manipulation behavior. Like previous methods for anomaly detection, our method trains a hidden Markov model (HMM) using multimodal observations from non-anomalous executions. In contrast to prior work, our system also uses a detection threshold that changes based on the execution progress. We evaluated our approach with haptic, visual, auditory, and kinematic sensing during a variety of manipulation tasks performed by a PR2 robot. The tasks included pushing doors closed, operating switches, and assisting able-bodied participants with eating yogurt. In our evaluations, our anomaly detection method performed substantially better with multimodal monitoring than single modality monitoring. It also resulted in more desirable ROC curves when compared with other detection threshold methods from the literature, obtaining higher true positive rates for comparable false positive rates.
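The key idea above is a detection threshold that changes with execution progress instead of a single fixed value. The sketch below assumes HMM log-likelihood traces from non-anomalous executions are already available and time-aligned, uses the time index as a stand-in for execution progress, and sets each threshold to the mean minus c standard deviations; the paper's actual progress estimate and threshold model may differ, and the numbers are hypothetical.

```python
import statistics
from typing import List, Sequence


def progress_thresholds(
    normal_traces: Sequence[Sequence[float]], c: float = 3.0
) -> List[float]:
    """Threshold at each progress step: mean - c * std of non-anomalous log-likelihoods.

    Assumes the traces are time-aligned and equally long, so the index t stands
    in for execution progress.
    """
    thresholds = []
    for t in range(len(normal_traces[0])):
        column = [trace[t] for trace in normal_traces]
        thresholds.append(statistics.mean(column) - c * statistics.pstdev(column))
    return thresholds


def first_anomaly(trace: Sequence[float], thresholds: Sequence[float]) -> int:
    """Index of the first step whose log-likelihood falls below its threshold, or -1."""
    for t, (log_likelihood, threshold) in enumerate(zip(trace, thresholds)):
        if log_likelihood < threshold:
            return t
    return -1


if __name__ == "__main__":
    # Hypothetical cumulative HMM log-likelihoods from three successful executions.
    normal = [[-1.0, -2.0, -3.0], [-1.2, -2.2, -3.2], [-0.8, -1.8, -2.8]]
    th = progress_thresholds(normal)
    print(first_anomaly([-1.1, -2.1, -4.0], th))  # 2: the drop at the last step is flagged
```

With these numbers, `first_anomaly([-2.9, -3.0, -3.1], th)` returns 0 because -2.9 is far below what is normal at the start of the task, whereas a single fixed threshold placed below the lowest value seen late in the task (about -3.49 here) would flag nothing.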
